Updated: March 25 2025 09:45

A new research paper by Parshakov et al. (2025) reveals an intriguing psychological phenomenon: people actually prefer content created by large language models (LLMs) over human-written content, until they discover it was AI-generated.

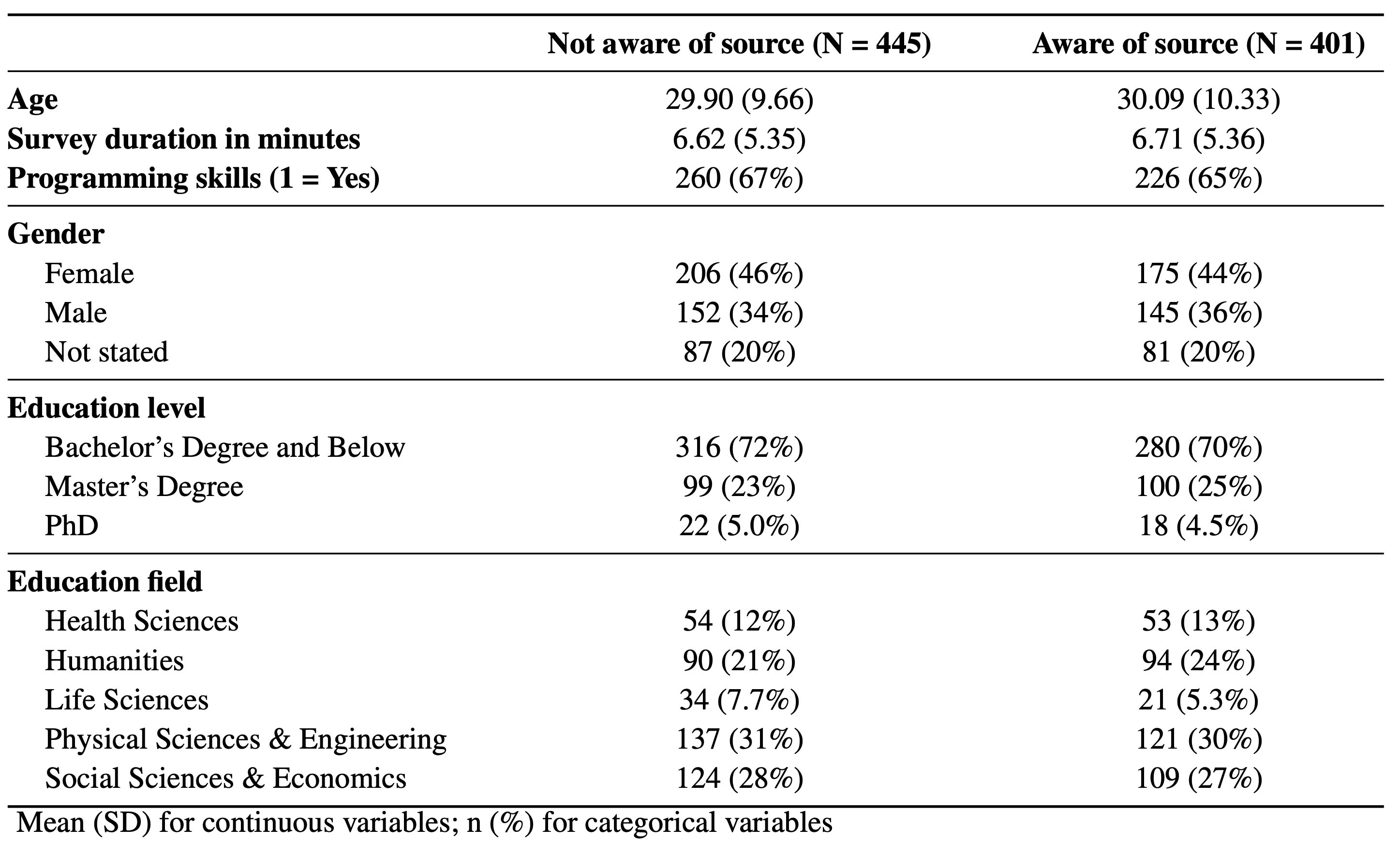

The study, conducted by researchers from HSE University and the University of Stavanger, presents compelling evidence that challenges our assumptions about content preferences. In a controlled field experiment, participants were shown responses to popular questions from platforms like Quora and Stack Overflow. Each response came from either a human or an AI system such as ChatGPT, Claude, Gemini, or Llama.

The results were striking: overall, participants showed a clear preference for AI-generated responses across multiple domains including Physical Sciences, Life Sciences, Health Sciences, Social Sciences, and Humanities.

The Revelation Effect: How Knowledge Changes Perception

While the general preference for AI content might seem surprising, the study's most fascinating finding was how this preference changed when participants learned the source of the content.

When informed that a response was AI-generated, participants' preference for that content diminished significantly. This suggests that our evaluative judgments about content quality are strongly influenced by knowing its origin—not just by the actual quality of the content itself.

This phenomenon highlights what psychologists call an "attribution bias"—our tendency to evaluate the same information differently based on its presumed source. When we know content comes from AI, our perception shifts, often leading us to view it more critically or with greater skepticism.

How Demographics Influence AI Content Perception

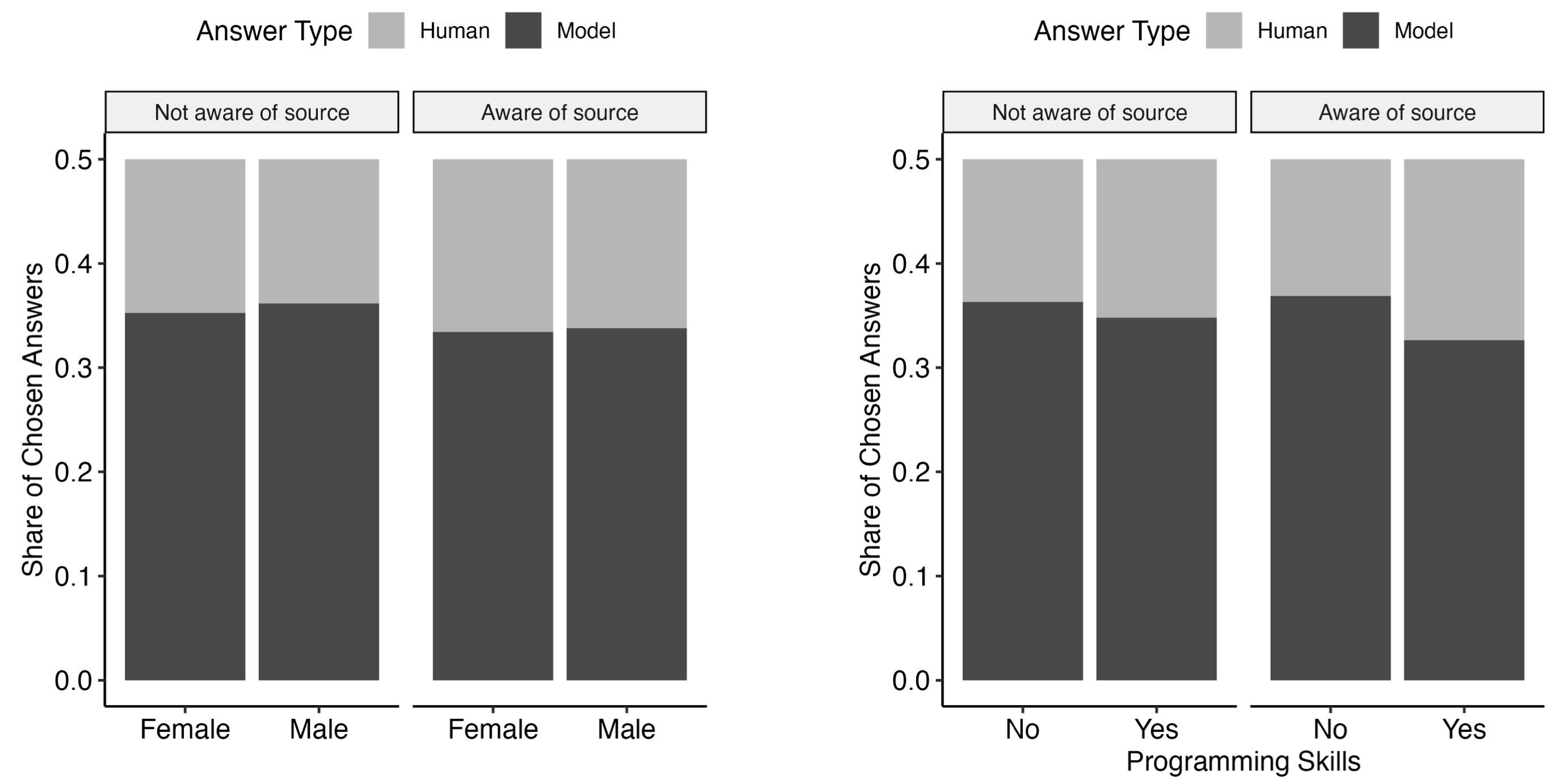

The study uncovered interesting variations in how different demographic groups responded to AI-generated content:

- Gender differences: Women demonstrated a stronger preference for human-generated responses than men did, regardless of whether they knew the source of the content. However, men showed a significant shift toward human content once they learned which responses were AI-generated.

- Technical expertise: People with programming skills were more likely to prefer human-generated responses when aware of the source, suggesting that technical knowledge may influence perceptions of AI capabilities.

- Age factors: Interestingly, older participants were slightly less likely to prefer human-generated answers, potentially indicating different generational attitudes toward AI-created content.

- Subject matter variation: In fields like Humanities and Life Sciences, knowing that content was AI-generated significantly reduced participants' preference for it. However, this effect wasn't observed in Physical Sciences, Engineering, or Social Sciences.

The Length and Style Factor

One notable aspect that might contribute to the preference for AI content is the difference in length and style between human and AI-generated responses. According to the study, AI-generated texts averaged between 1,854 and 2,265 characters, while human responses averaged 1,515 characters (or about 265 words).

This pattern aligns with findings from other research showing that AI systems often produce longer, more comprehensive responses than humans. The detailed nature of AI responses might create an impression of thoroughness and expertise that influences user preferences.

As the researchers note, "The findings on text-length differences between human and AI-generated texts align with those reported by Karinshak et al. (2023)," suggesting this is a consistent pattern in how AI systems communicate compared to humans.
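
To put the length gap in relative terms, the averages quoted above can be turned into percentage differences. The short Python sketch below uses only the character counts cited in this article. The constant and function names are hypothetical, chosen for illustration, and nothing here is taken from the study's own analysis.

```python
# Average response lengths in characters, as quoted in this article.
HUMAN_AVG_CHARS = 1515
AI_AVG_CHARS_LOW = 1854   # lower end of the reported AI range
AI_AVG_CHARS_HIGH = 2265  # upper end of the reported AI range


def percent_longer(ai_chars: int, human_chars: int) -> float:
    """Return how much longer the AI text is than the human text, in percent."""
    return (ai_chars - human_chars) / human_chars * 100


low = percent_longer(AI_AVG_CHARS_LOW, HUMAN_AVG_CHARS)
high = percent_longer(AI_AVG_CHARS_HIGH, HUMAN_AVG_CHARS)
print(f"AI responses were roughly {low:.0f}% to {high:.0f}% longer on average.")
```

By this rough calculation, the average AI response was between about a fifth and a half longer than the average human response.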

The Future of Human-AI Content Perception

As AI systems continue to evolve and become increasingly integrated into our information ecosystem, how might our perceptions change?

The research suggests several potential future trends:

- Normalization Effect: As exposure to AI-generated content increases, the bias against it may gradually diminish, leading to more objective evaluations based on quality.

- Quality Convergence: The distinction between human and AI content may become increasingly difficult to detect, potentially reducing the impact of source knowledge.

- Evolving Evaluation Criteria: We may develop new frameworks for evaluating content that acknowledge the complementary strengths of human and AI creation.

- Industry Adaptation: Content platforms and publishers may develop new best practices around disclosure and attribution that balance transparency with user experience.

The researchers emphasize that "transparency regarding content origin plays a critical role in shaping evaluative judgments," suggesting that how we communicate about AI involvement will remain crucial.

For those working with AI content tools or consuming AI-generated information, this research offers several valuable insights:

- Focus on quality first: The inherent quality of content remains important regardless of source—AI or human.

- Consider your audience: Different demographic groups and interest communities may respond differently to AI content disclosure.

- Context matters: The appropriate approach to AI content creation and attribution varies by field and purpose.

- Embrace augmentation: Human-AI collaboration may offer the best of both worlds—quality content with human oversight.

- Develop nuanced disclosure practices: Find balanced approaches to transparency that acknowledge AI contributions without triggering negative biases.

As AI content generation tools become increasingly sophisticated and ubiquitous, understanding these psychological dynamics will be crucial for creators, platforms, and users alike. The challenge moving forward will be developing approaches that allow us to benefit from AI's content creation capabilities while addressing the psychological barriers that can limit their acceptance.
