Updated: March 23 2025 21:27

In a presentation at NVIDIA GTC 2025, Sarah Laszlo, head of the machine learning platform at Visa, pulled back the curtain on how one of the world's largest payment processors has engineered its software infrastructure to thrive in the generative AI era. Visa's journey offers valuable insights for any organization looking to leverage AI while managing finite hardware resources and stringent data security requirements.

The Scale of Visa's Data Challenge

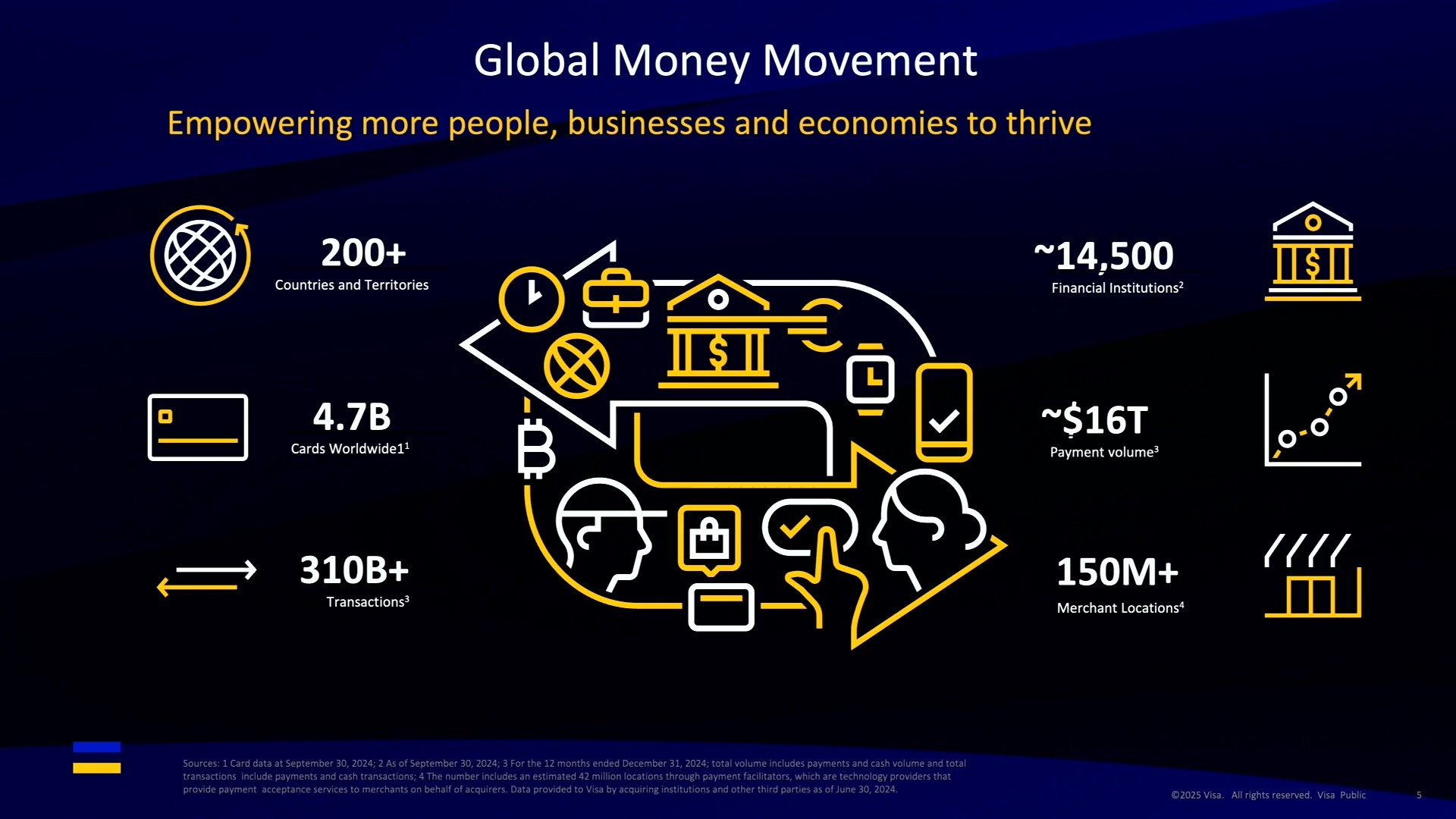

To appreciate Visa's AI innovations, we must first understand the massive scale at which they operate:

- Processing up to 76,000 transactions per second

- Operating at 99.99% availability

- Serving over 4.5 billion Visa cards

- Supporting 130+ million merchant locations across 200+ countries

- Preventing more than $27 billion in fraud annually
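Two of these figures imply useful back-of-the-envelope numbers: how many transactions the peak rate allows per day, and how much downtime 99.99% availability permits per year. The sketch below assumes a 365-day year and treats 76,000 transactions per second as a peak ceiling, so the daily figure is an upper bound rather than Visa's actual daily volume. The function names are illustrative only.

```python
# Back-of-the-envelope arithmetic for two of the scale figures above.
SECONDS_PER_DAY = 24 * 60 * 60
MINUTES_PER_YEAR = 365 * 24 * 60  # assumption: non-leap year


def peak_daily_capacity(tps: int) -> int:
    """Transactions per day if the peak rate were sustained for 24 hours."""
    return tps * SECONDS_PER_DAY


def downtime_budget_minutes(availability: float) -> float:
    """Allowed downtime per year, in minutes, for an availability fraction."""
    return (1.0 - availability) * MINUTES_PER_YEAR


print(peak_daily_capacity(76_000))                # 6566400000
print(round(downtime_budget_minutes(0.9999), 1))  # 52.6
```

In other words, "four nines" leaves a budget of under an hour of unavailability per year.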

This transaction volume creates a uniquely valuable dataset that spans decades. Unlike the text and image data that power many AI systems, Visa's data is mixed-type, non-syntactic, and tabular, which presents both extraordinary opportunities and unique challenges.

AI Principles as the Foundation

At Visa, all AI work is guided by five core principles:

- Security: Payment data safety is paramount

- Control: Both Visa and users maintain control over data

- Value: Data is used only when it benefits stakeholders

- Fairness: Models must be accurate, explainable, and safe

- Accountability: Every employee must uphold these principles

These principles, particularly the commitment to security, directly influence Visa's technology stack. To maintain the highest levels of data security, Visa handles sensitive data exclusively in their own data centers rather than in the cloud. This creates a fundamental constraint: finite hardware resources that cannot be frequently refreshed or easily scaled.

Three Software Innovations That Power Visa's AI

Facing these constraints, Visa has developed innovative software solutions to maximize the performance of their GPU fleet. Laszlo highlighted three key innovations:

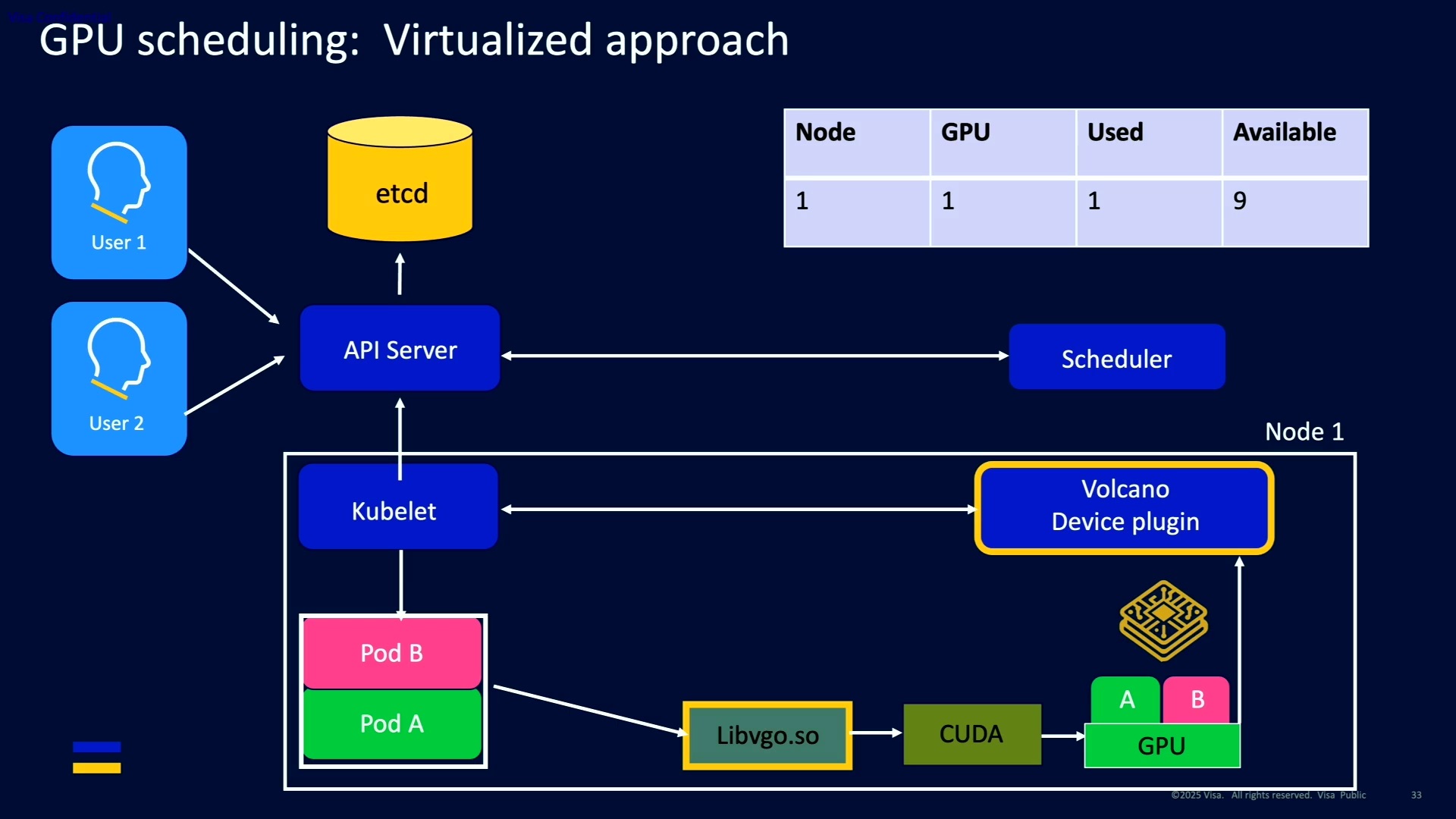

1. Virtual GPU (VGPU) Solution

Visa noticed that many users, particularly those running Jupyter notebooks, would claim an entire GPU but use only a fraction of its capacity. This led to a situation where teams would report being unable to get GPU resources despite their overall GPU quota never being fully utilized.

The solution was implementing virtual GPUs, allowing users to provision as little as one-tenth of a physical GPU. This increased the number of notebooks that could run on GPUs by a factor of 10, dramatically improving utilization. Technically, this was achieved by:

- Using a fork of Volcano, the open-source batch scheduler for Kubernetes

- Implementing a Kubernetes-compatible mechanism to isolate memory and compute between processes

- Creating a transparent experience where users could select VGPU instances the same way they would select any other instance type
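The talk does not detail the scheduler internals, so the sketch below models only the accounting idea behind fractional allocation. Each physical GPU is divided into ten slices (an assumption matching the one-tenth minimum above), and notebook requests are placed first-fit. It does not model the memory and compute isolation that the Volcano-based setup provides, and `schedule` and `SLICES_PER_GPU` are hypothetical names.

```python
from typing import Dict

SLICES_PER_GPU = 10  # assumption: capacity is granted in tenths of a physical GPU


def schedule(requests: Dict[str, int], num_gpus: int) -> Dict[str, int]:
    """First-fit placement of notebook requests (in slices) onto GPUs.

    Returns a mapping notebook -> GPU index; requests that do not fit are omitted.
    """
    free = [SLICES_PER_GPU] * num_gpus  # remaining slices on each GPU
    placement: Dict[str, int] = {}
    for notebook, slices in requests.items():
        if not 1 <= slices <= SLICES_PER_GPU:
            raise ValueError(f"{notebook}: request must be 1..{SLICES_PER_GPU} slices")
        for gpu_id, available in enumerate(free):
            if available >= slices:  # first GPU with enough room wins
                free[gpu_id] -= slices
                placement[notebook] = gpu_id
                break
    return placement


light = {f"nb{i}": 1 for i in range(40)}   # forty notebooks asking for 1/10 GPU each
whole = {f"nb{i}": 10 for i in range(40)}  # the same notebooks each claiming a full GPU
print(len(schedule(light, num_gpus=4)))    # 40
print(len(schedule(whole, num_gpus=4)))    # 4
```

With whole-GPU claims, four GPUs host only four notebooks; with one-tenth slices, the same hardware hosts forty, which is the 10x effect described above.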

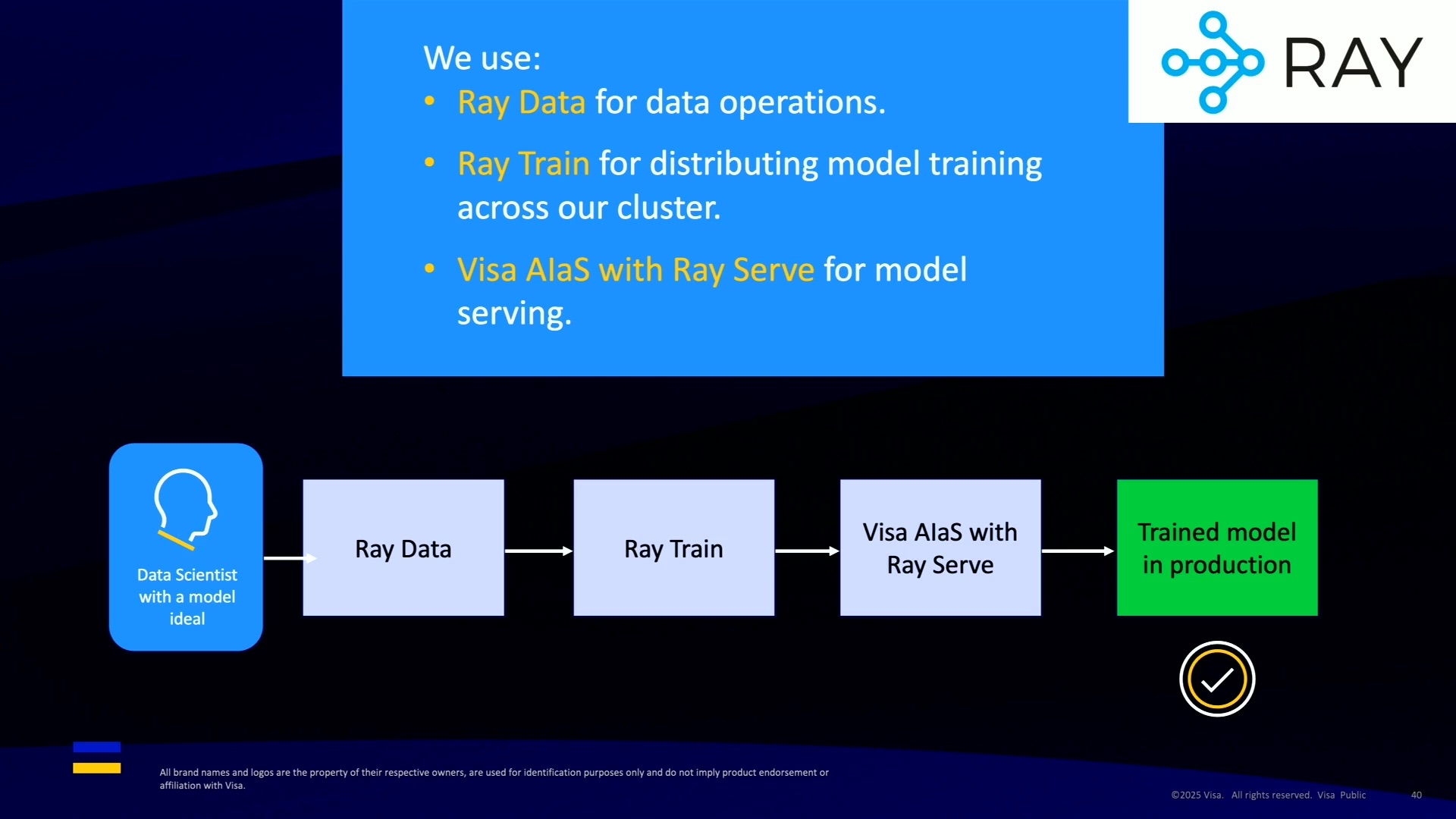

2. Ray Framework Adoption

Visa observed friction at the boundaries between different tools in their AI/ML workflow. Data scientists were using separate tools for data conditioning (Spark), model training (Dask), and model serving (various options).

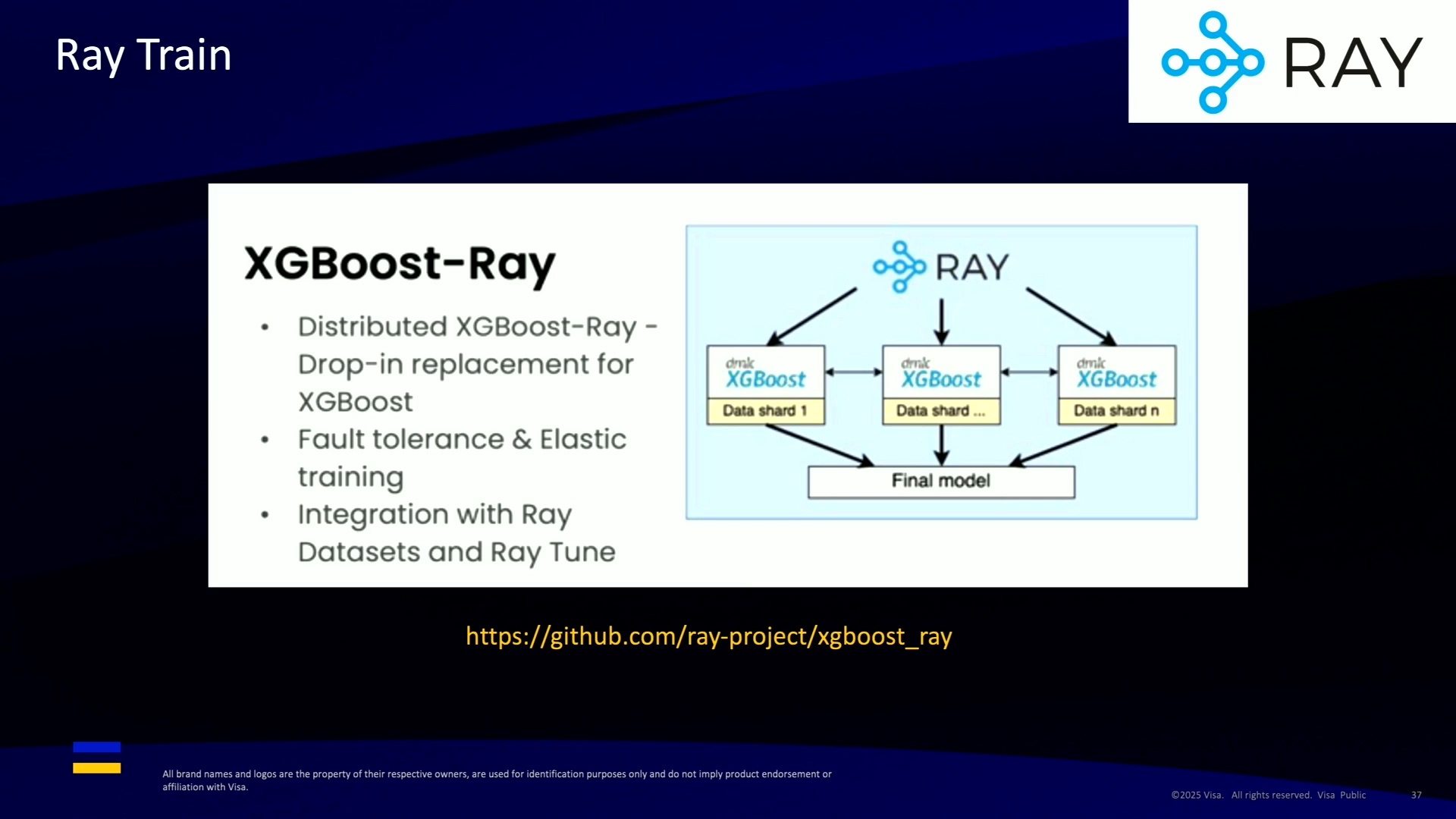

To streamline this process, Visa adopted the Ray framework across their entire ML stack:

- Ray Data for data operations

- Ray Train for distributed model training

- A custom variant of Ray Serve (called ACE Serve) for low-latency model serving

The results were remarkable, particularly for XGBoost models, which train approximately 8x faster when distributed over a pod of GPUs. What used to take hours now takes minutes, significantly improving the development workflow.
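The talk does not show Visa's Ray Train code, so the following is only a standard-library sketch of the data-parallel idea that lets gradient-boosted trees scale across workers. Each worker bins its own shard of rows into gradient and hessian histograms, the histograms are summed (the job an allreduce performs across GPUs), and split finding runs on the summed result. Threads stand in for GPU workers, and `NUM_BINS`, the function names, and the toy data are hypothetical. The split score follows XGBoost's gain formula up to the constant 1/2 factor and the gamma penalty, which do not change which split wins.

```python
from concurrent.futures import ThreadPoolExecutor

NUM_BINS = 4  # assumption: one feature, pre-bucketed into integer bins 0..NUM_BINS-1


def shard_histogram(bins, grads, hess):
    """Sum gradients and hessians per feature bin for one worker's shard."""
    g_hist, h_hist = [0.0] * NUM_BINS, [0.0] * NUM_BINS
    for b, g, h in zip(bins, grads, hess):
        g_hist[b] += g
        h_hist[b] += h
    return g_hist, h_hist


def allreduce(partials):
    """Element-wise sum of per-worker histograms (what an allreduce computes)."""
    g_total, h_total = [0.0] * NUM_BINS, [0.0] * NUM_BINS
    for g_hist, h_hist in partials:
        for i in range(NUM_BINS):
            g_total[i] += g_hist[i]
            h_total[i] += h_hist[i]
    return g_total, h_total


def distributed_histogram(bins, grads, hess, num_workers):
    """Shard rows round-robin, build histograms in parallel, then reduce."""
    shards = [(bins[w::num_workers], grads[w::num_workers], hess[w::num_workers])
              for w in range(num_workers)]
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        partials = list(pool.map(lambda shard: shard_histogram(*shard), shards))
    return allreduce(partials)


def best_split(g_hist, h_hist, reg_lambda=1.0):
    """Return (bin threshold, gain); rows with bin <= threshold go left."""
    G, H = sum(g_hist), sum(h_hist)

    def score(g, h):
        return g * g / (h + reg_lambda)

    best_bin, best_gain = None, float("-inf")
    g_left = h_left = 0.0
    for b in range(NUM_BINS - 1):
        g_left += g_hist[b]
        h_left += h_hist[b]
        gain = score(g_left, h_left) + score(G - g_left, H - h_left) - score(G, H)
        if gain > best_gain:
            best_bin, best_gain = b, gain
    return best_bin, best_gain


bins = [0, 1, 2, 3, 0, 1, 2, 3]
grads = [-1.0, -1.0, 1.0, 1.0, -1.0, -1.0, 1.0, 1.0]
hess = [1.0] * 8
merged = distributed_histogram(bins, grads, hess, num_workers=4)
print(best_split(*merged))  # (1, 6.4)
```

Because histogram sums are additive, the merged histogram matches a single-machine pass, so adding workers speeds up the expensive binning step without changing the chosen split.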

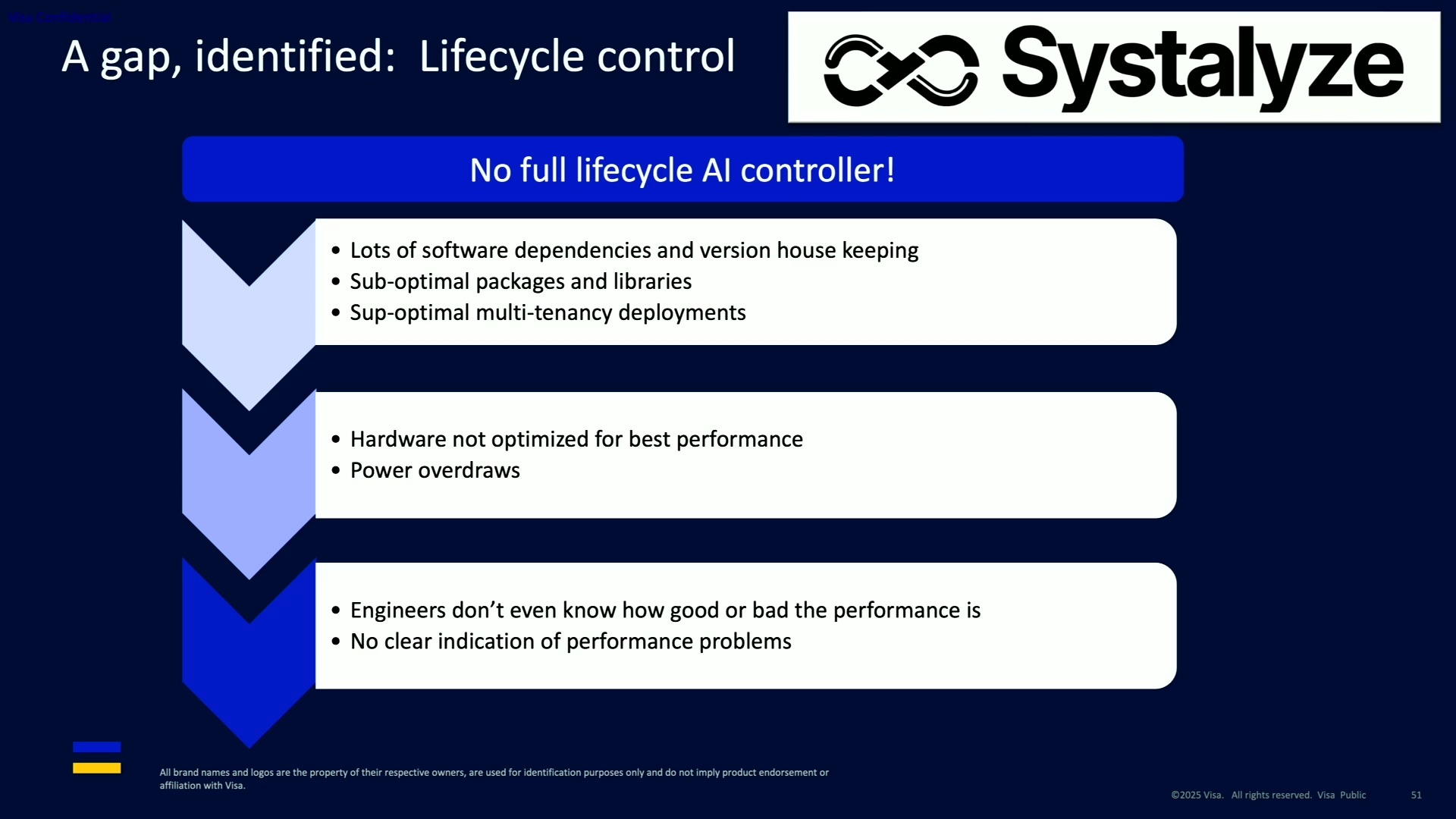

3. Performance Controller with Systalyze

The third innovation addresses the challenge of training foundation-scale models on Visa's unique transaction data. Unlike image or language models where the hyperparameter space is well understood, transaction foundation models represent uncharted territory.

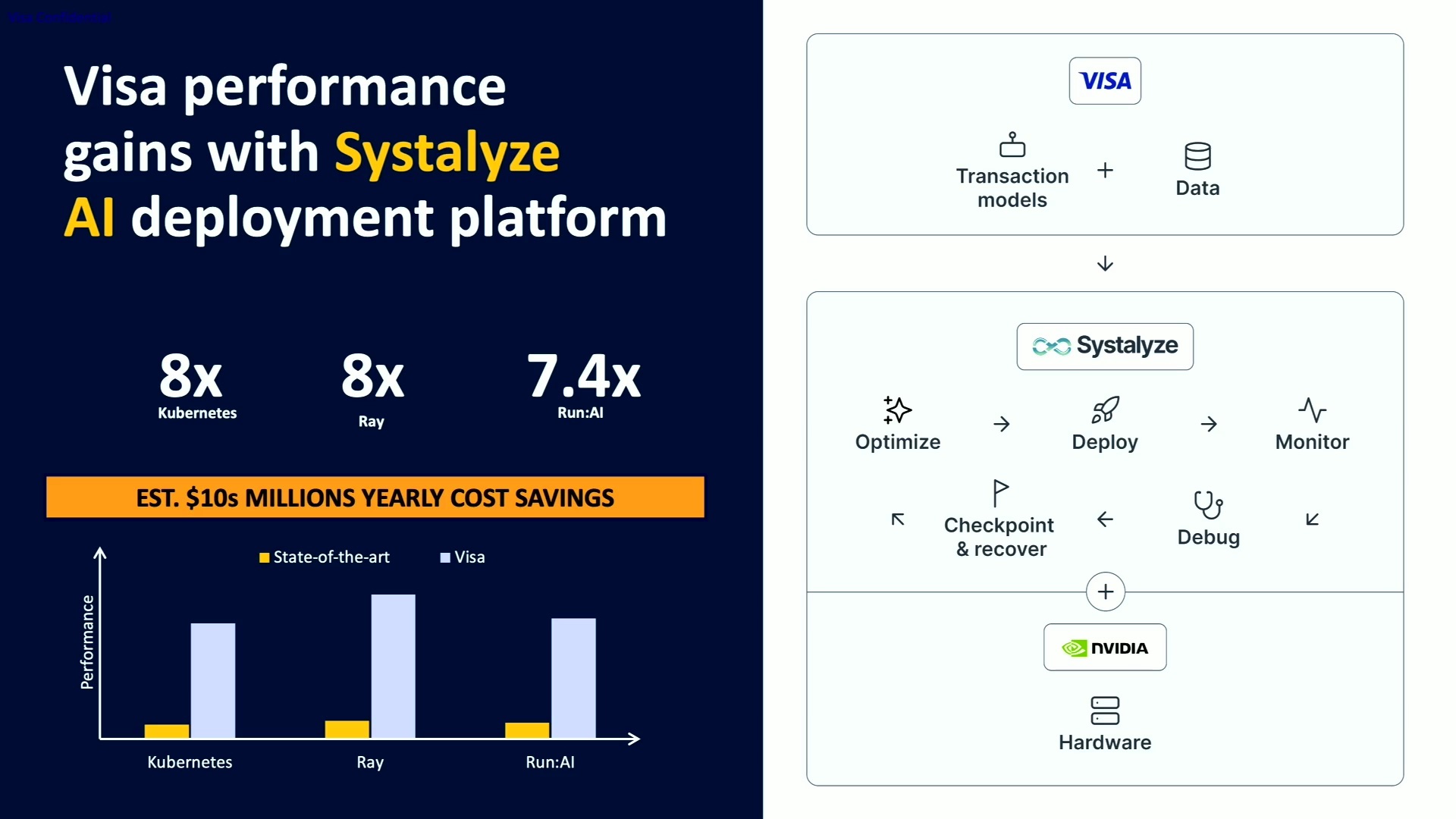

In collaboration with MIT-founded startup Systalyze, Visa implemented a performance controller that:

- Manages the rapidly changing state-of-the-art software environment

- Helps keep hardware optimized for best performance

- Provides guidance on hyperparameter optimization

In a proof of concept, this solution delivered an 8x improvement in training iteration time for large language models compared to a baseline KubeRay deployment on Kubernetes. Over a year, this translates to millions of dollars in GPU-hour savings.
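Systalyze's controller is not described in enough detail to reproduce, so the following is a hypothetical sketch of one job such a controller could do: time candidate configurations, keep the fastest, and translate a speedup into GPU hours. `toy_measure` is an invented cost model standing in for timing real training steps, and the configuration knobs, their values, and the 1,000-hour baseline are all assumptions. The savings arithmetic assumes the same number of GPUs before and after, so an 8x faster iteration needs one-eighth of the GPU hours.

```python
from itertools import product
from typing import Callable, Dict, List, Optional, Tuple

Config = Dict[str, int]


def tune(measure: Callable[[Config], float],
         space: Dict[str, List[int]]) -> Tuple[Optional[Config], float]:
    """Grid-search every configuration and return the fastest one.

    `measure` returns seconds per training iteration for a configuration.
    """
    keys = list(space)
    best_cfg, best_time = None, float("inf")
    for values in product(*(space[k] for k in keys)):
        cfg = dict(zip(keys, values))
        seconds = measure(cfg)
        if seconds < best_time:
            best_cfg, best_time = cfg, seconds
    return best_cfg, best_time


def gpu_hours_saved(baseline_hours: float, speedup: float) -> float:
    """GPU hours avoided when the same work runs `speedup` times faster."""
    return baseline_hours * (1.0 - 1.0 / speedup)


def toy_measure(cfg: Config) -> float:
    # Hypothetical cost model standing in for timing real training steps.
    seconds = 1.0 + 8.0 / cfg["micro_batch"]  # fixed overhead amortized by batching
    if cfg["checkpointing"]:
        seconds += 0.25                       # assumed recompute cost
    elif cfg["micro_batch"] > 8:
        seconds += 4.0                        # assumed memory spill without checkpointing
    return seconds


space = {"micro_batch": [1, 2, 4, 8, 16], "checkpointing": [0, 1]}
best_cfg, best_time = tune(toy_measure, space)
print(best_cfg, best_time)           # {'micro_batch': 16, 'checkpointing': 1} 1.75
print(gpu_hours_saved(1000.0, 8.0))  # 875.0
```

A production controller would replace the exhaustive grid with a smarter search and real measurements, but the bookkeeping is the same: at 8x, 87.5% of the baseline GPU hours are freed.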

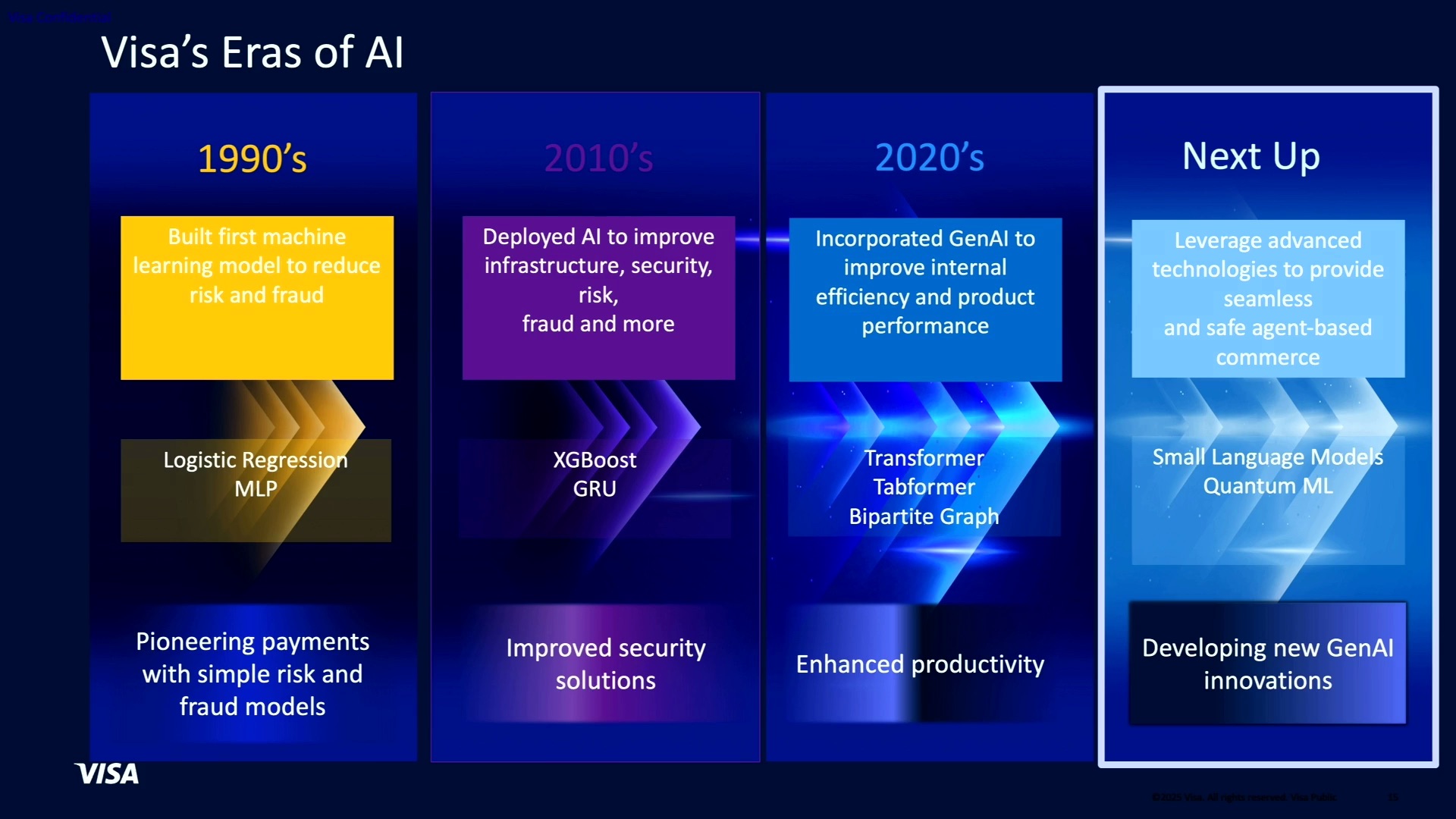

Visa's AI Journey

Visa's approach to AI has evolved through distinct eras:

- 1990s: Pioneering statistical and machine learning models for risk and fraud

- 2010s: Expanding into gradient boosting and deep learning (the "predictive AI era")

- 2020s: Early adoption of generative AI techniques

- Future: Already exploring small language models and quantum ML

The current generative AI era builds on Visa's unique data advantages. Even a few transactions can reveal significant patterns about consumer behavior. For example, knowing that someone bought premium coffee and concert tickets at midnight provides insights into their preferences and lifestyle.

By building foundation models on this transaction data, Visa can create personalized representations of cardholders without exposing their raw personal information - enabling better fraud detection and personalized services while maintaining privacy.
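Visa's foundation-model architecture was not described in the talk. As a toy illustration of a cardholder representation built from coarse transaction attributes, the sketch below maps each transaction to tokens (merchant category and a six-hour time bucket), gives each token a deterministic vector derived from SHA-256 in place of a learned embedding, and averages them. The field names, `DIM`, and the hashing trick are all assumptions; a real model would learn these vectors, and averaging alone is not a formal privacy guarantee.

```python
import hashlib
import math
from typing import Dict, List

DIM = 8  # assumption: tiny embedding width, chosen only for readability


def token_vector(token: str) -> List[float]:
    """Deterministic pseudo-random unit vector per token (stand-in for a learned embedding)."""
    digest = hashlib.sha256(token.encode("utf-8")).digest()
    raw = [byte / 255.0 - 0.5 for byte in digest[:DIM]]  # never all zero
    norm = math.sqrt(sum(x * x for x in raw))
    return [x / norm for x in raw]


def tokenize(txn: Dict) -> List[str]:
    """Keep only coarse attributes; exact amounts and merchant names are never read."""
    return [f"category:{txn['category']}", f"hour_bucket:{txn['hour'] // 6}"]


def cardholder_embedding(transactions: List[Dict]) -> List[float]:
    """Mean-pool the token vectors of all of a cardholder's transactions."""
    vectors = [token_vector(tok) for txn in transactions for tok in tokenize(txn)]
    if not vectors:
        return [0.0] * DIM
    return [sum(column) / len(vectors) for column in zip(*vectors)]


night_owl = [
    {"category": "premium_coffee", "hour": 0, "amount": 6.50},
    {"category": "concert_tickets", "hour": 0, "amount": 120.00},
]
print([round(x, 3) for x in cardholder_embedding(night_owl)])
```

Two cardholders with the same coarse habits get the same vector regardless of the exact amounts they spent, which is the property that lets downstream models use behavior without seeing the raw records.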

Visa's journey offers several important takeaways for organizations building AI infrastructure:

- Guide technology with principles: Visa's AI principles directly shape their infrastructure decisions.

- Innovate to maximize finite resources: When you can't easily add hardware, software innovation becomes essential.

- Eliminate friction between tools: Moving to a unified framework (Ray) reduced development time and complexity.

- Learn from missteps: Laszlo shared three approaches Visa has deprecated, namely automating the gaps between tools instead of unifying the tools, using Dask instead of Ray, and maintaining a GUI for feature engineering that constantly required backend updates.

- Balance on-premises and cloud: While keeping sensitive data on-premises, Visa is strategically exploring cloud options for less sensitive workloads.

Visa's experience demonstrates that even with hardware constraints and strict security requirements, thoughtful software innovation can unlock tremendous AI capabilities. As Sarah Laszlo concluded, even infrastructure work should be guided by principles, and collaboration with open source, academic, and startup communities can help organizations overcome the unique challenges they face in the AI era.
