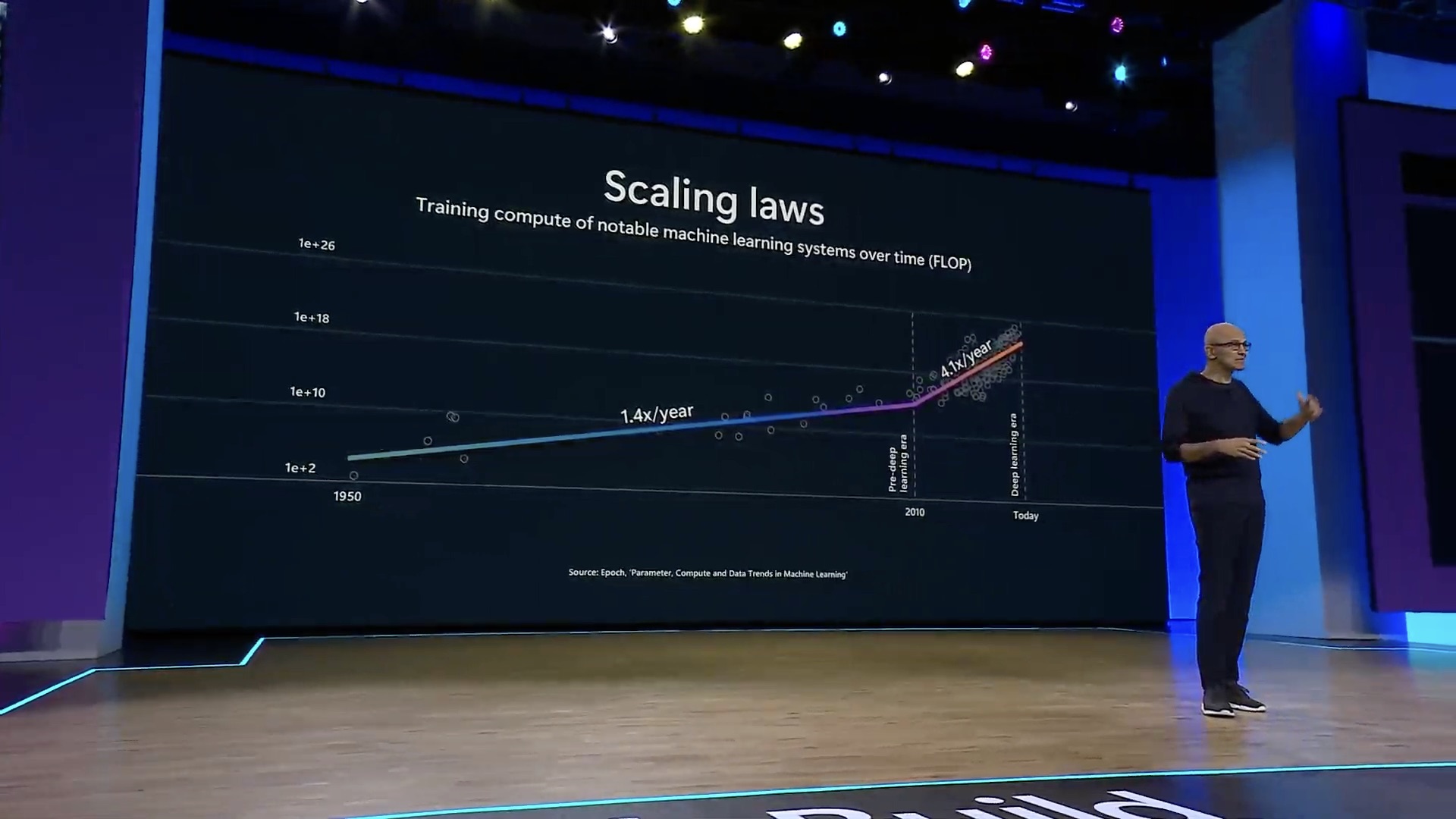

Updated: May 21 2024 17:55Microsoft has been making significant strides in the development of small language models (SLMs) in recent months. Following the launch of the cost-effective

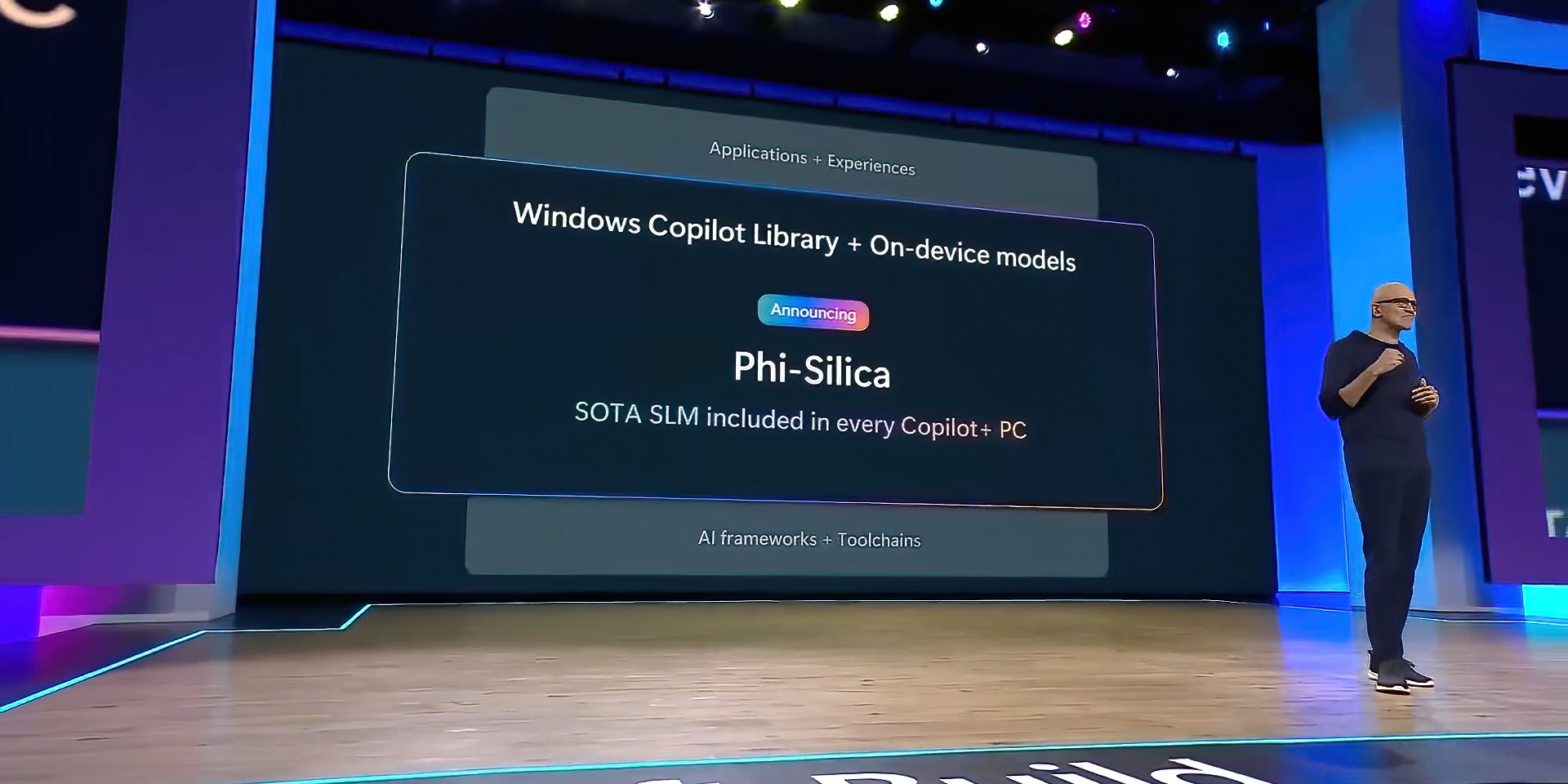

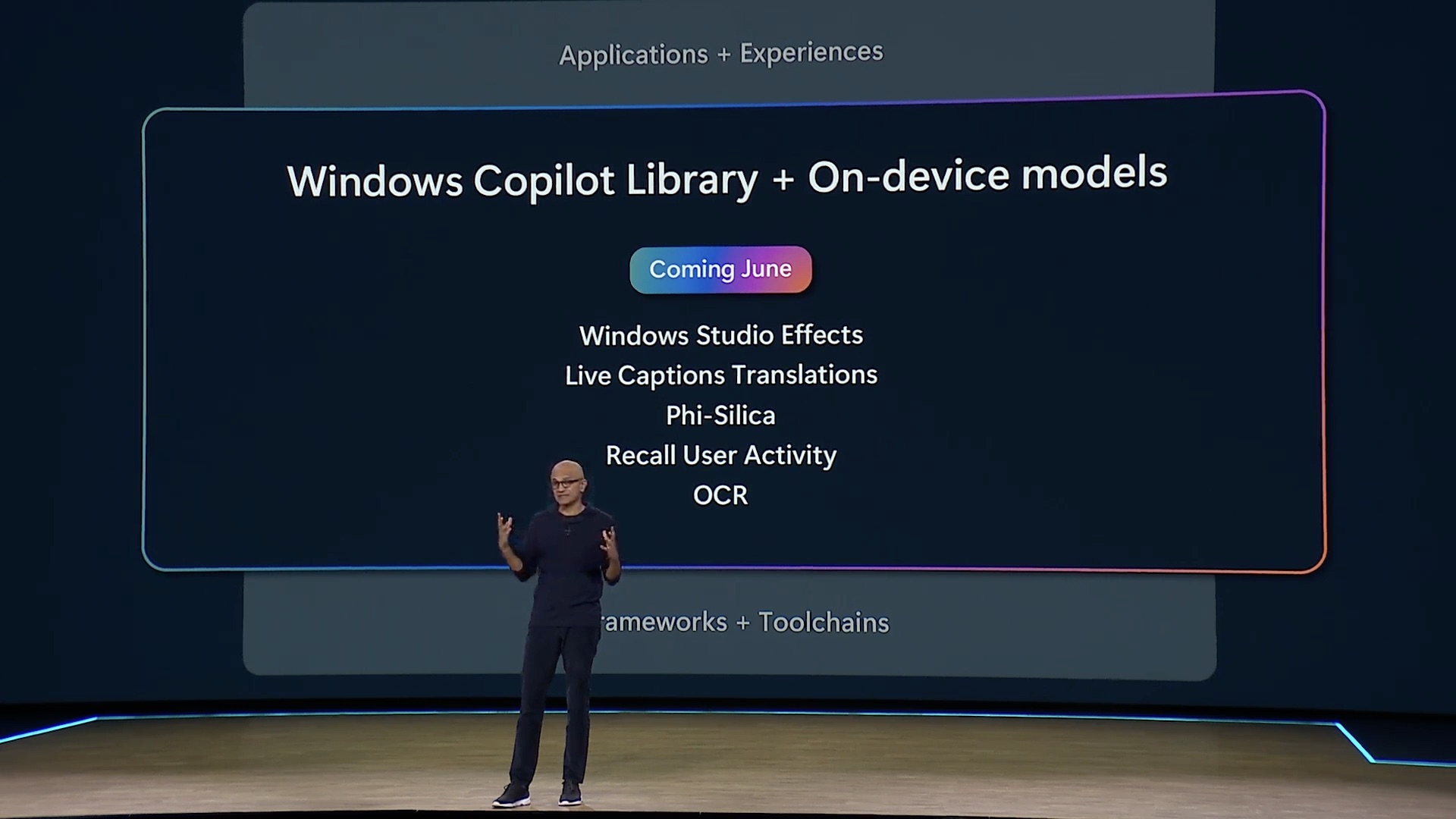

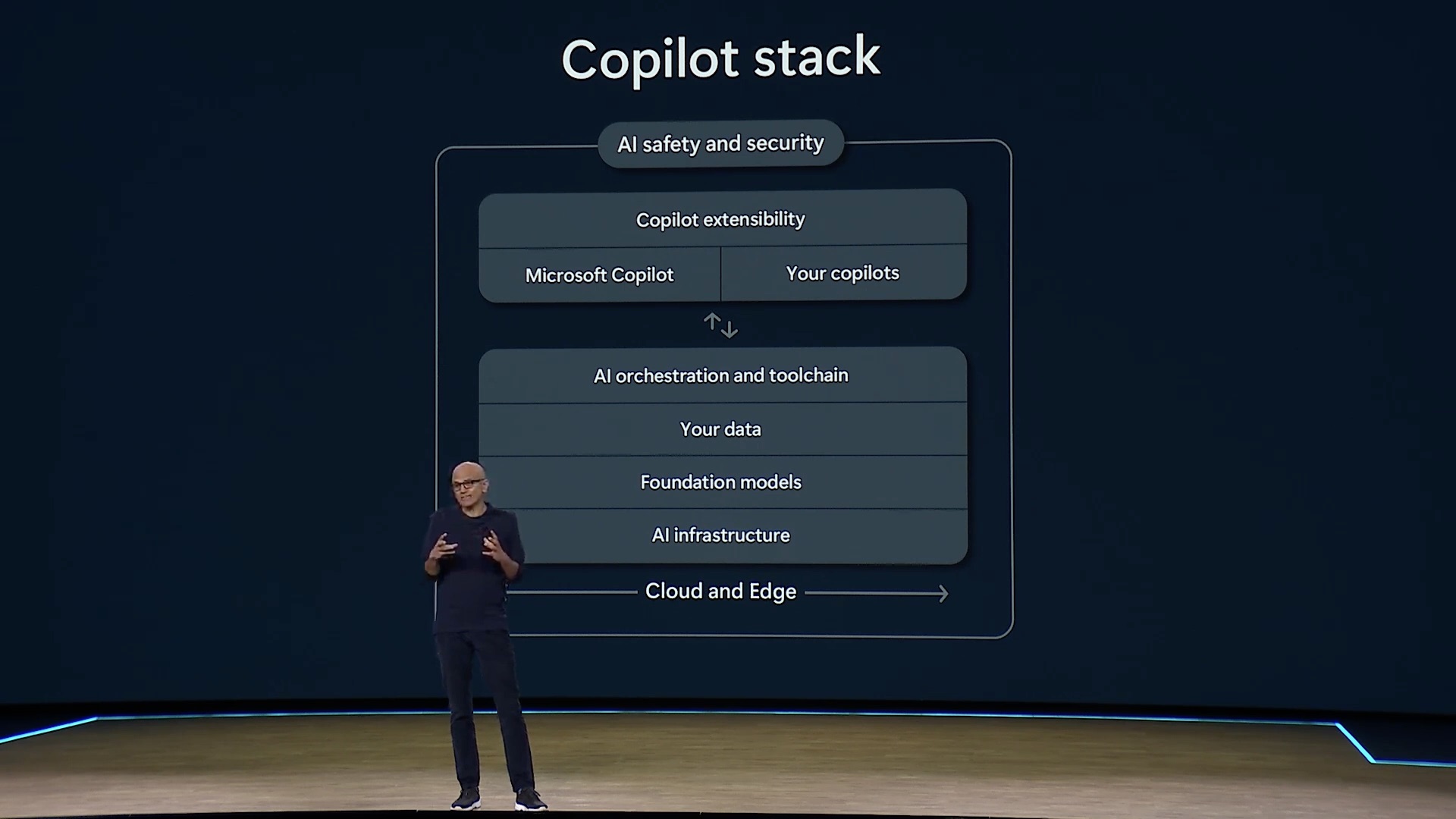

Phi-3 series, which outperformed competitors, the tech giant has now introduced a new addition to the family: Phi Silica. This custom-built model is designed specifically for the Neural Processing Units (NPUs) in Copilot+ PCs and is set to ship as part of future versions of Windows to power generative AI applications.

Phi-Silica versus OpenELM from Apple

Unveiled during the AI-focused Microsoft Build 2024 event, Phi-Silica promises to be even more cost-effective and power-efficient than its predecessors. According to Microsoft, with prompt processing fully offloaded to the NPU, the model's first-token latency corresponds to a prompt-processing rate of about 650 tokens per second, while drawing only about 1.5 watts. Token generation then reuses the KV cache produced on the NPU and runs on the CPU, producing approximately 27 tokens per second. This efficiency leaves the CPU and GPU largely free to handle other work.
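To make these rates concrete, here is a back-of-the-envelope estimate using the figures quoted above. It is a sketch under simplifying assumptions: both rates stay constant regardless of prompt length, prompt processing and generation happen one after the other, and the roughly 1.5 W figure is applied to the prompt-processing phase only. The prompt and reply lengths are arbitrary examples.

```python
# Back-of-the-envelope latency/energy estimate from Microsoft's quoted Phi-Silica figures.
# Assumptions (not from Microsoft): rates are constant, phases run sequentially,
# and the ~1.5 W power figure applies only to prompt processing.
PREFILL_TOKENS_PER_SEC = 650.0  # prompt processing with full NPU offload
DECODE_TOKENS_PER_SEC = 27.0    # generation on the CPU, reusing the NPU's KV cache
PREFILL_POWER_WATTS = 1.5       # approximate power quoted for prompt processing


def estimate(prompt_tokens: int, output_tokens: int) -> dict:
    """Estimate time to first token, generation time, and prompt-processing energy."""
    ttft_s = prompt_tokens / PREFILL_TOKENS_PER_SEC
    decode_s = output_tokens / DECODE_TOKENS_PER_SEC
    return {
        "time_to_first_token_s": ttft_s,
        "generation_s": decode_s,
        "total_s": ttft_s + decode_s,
        "prefill_energy_j": ttft_s * PREFILL_POWER_WATTS,  # joules = watts x seconds
    }


if __name__ == "__main__":
    result = estimate(prompt_tokens=1300, output_tokens=270)
    for key, value in result.items():
        print(f"{key}: {value:.2f}")
```

For a 1,300-token prompt and a 270-token reply, this prints a time to first token of 2.00 s, 10.00 s of generation, 12.00 s in total, and about 3.00 J for prompt processing. Under these figures, generation rather than prompt processing dominates the wait for longer replies.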

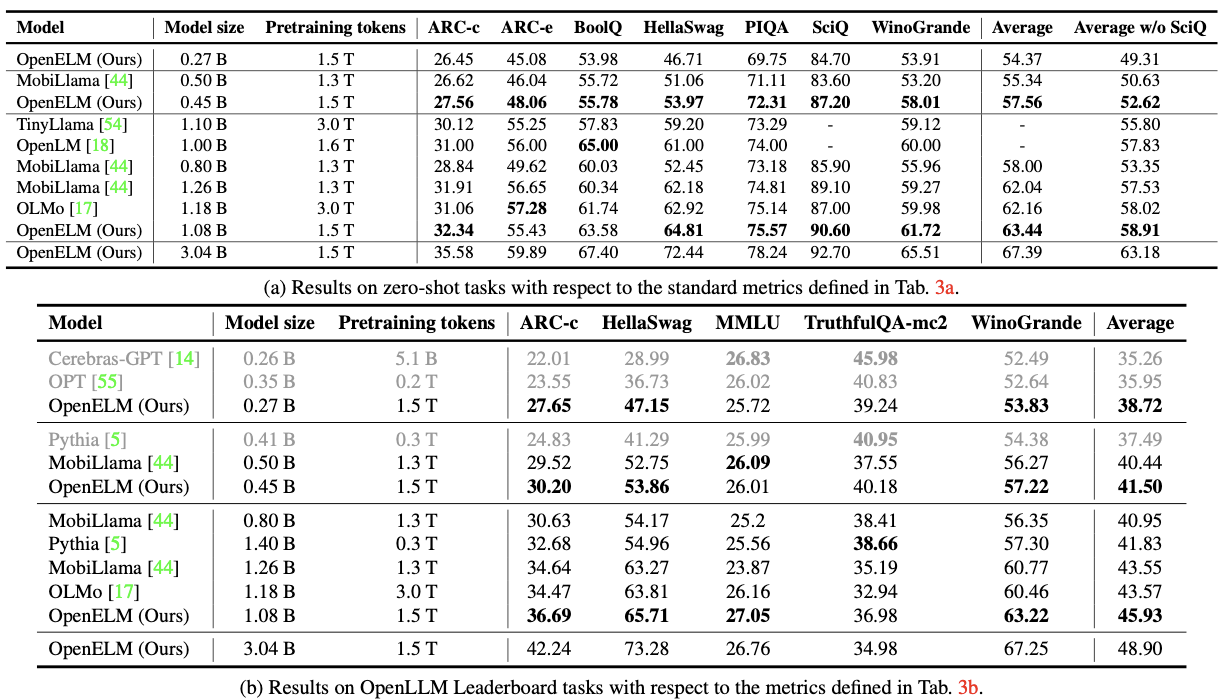

The arrival of Phi-Silica comes amid a wave of small AI models launched by various tech companies. Apple, a major Microsoft competitor, recently introduced OpenELM, a family of models offered in sizes from 270 million to 3 billion parameters. The OpenELM models were pre-trained on a dataset of about 1.8 trillion tokens and benchmarked on an Intel i9-13900KF CPU and on an Apple MacBook Pro with an M2 Max system-on-chip. While they are not the most powerful models, they perform well on some benchmarks, especially the 450-million-parameter instruct variant.

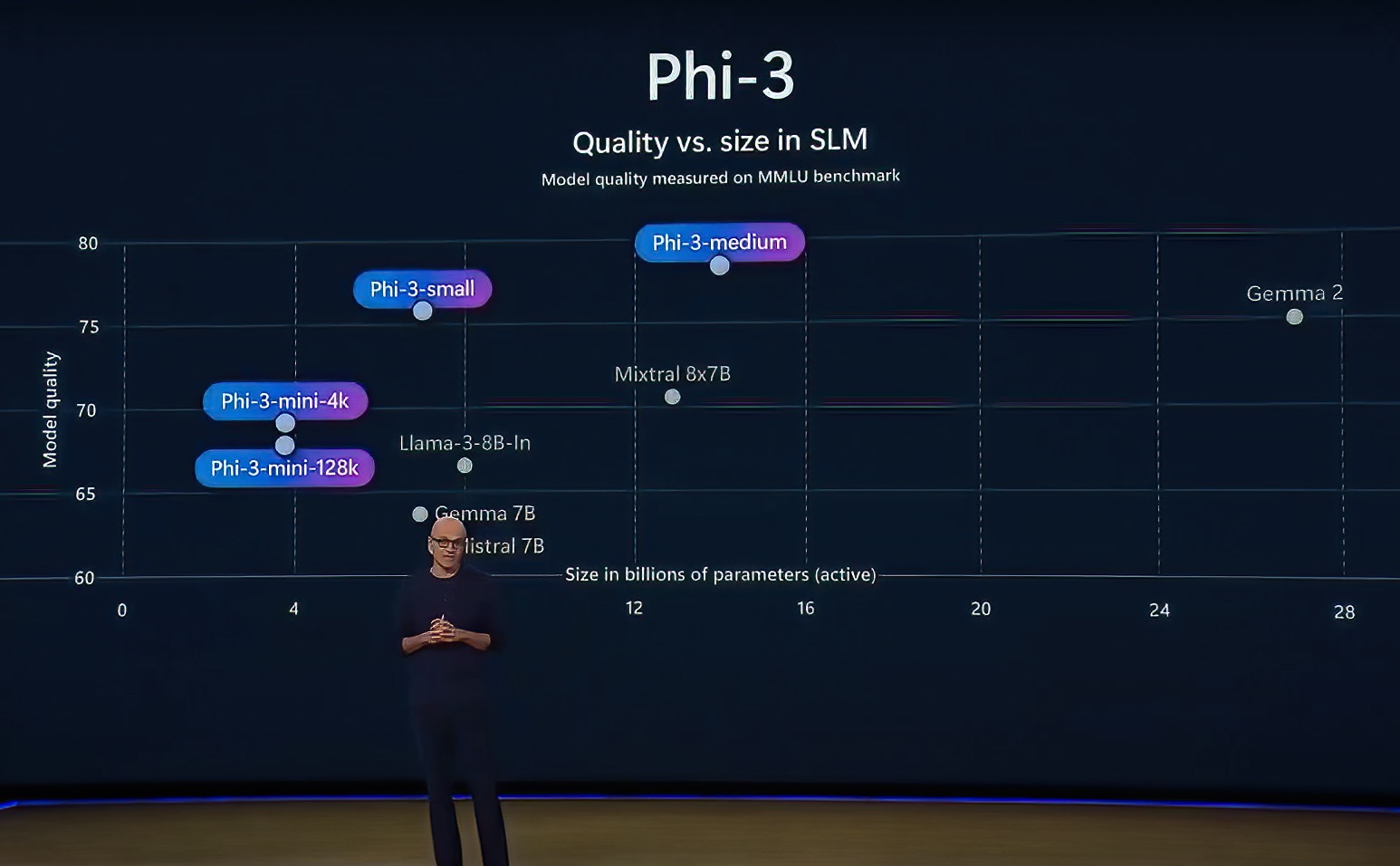

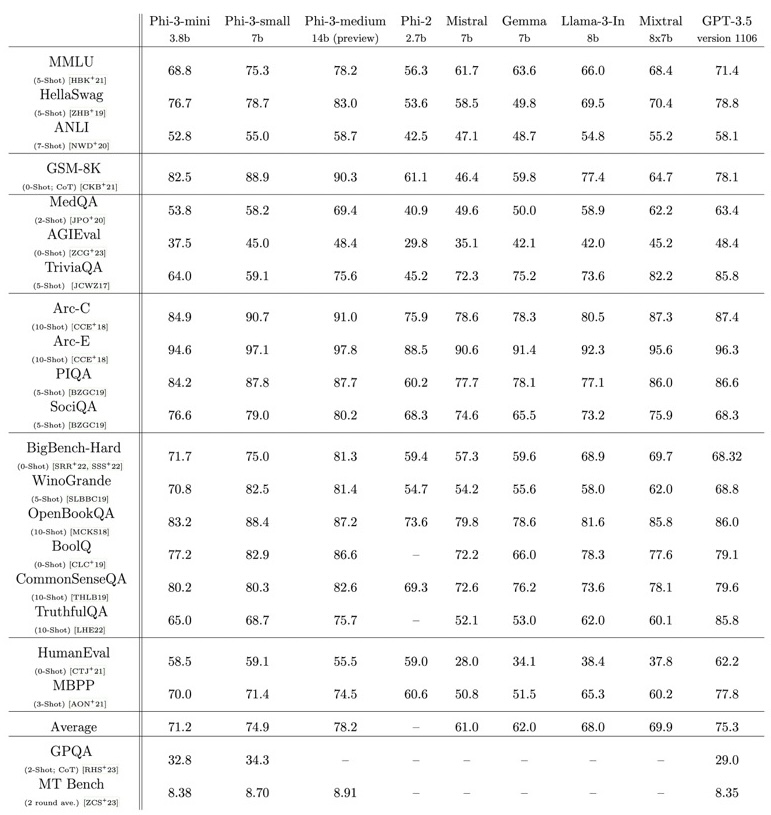

In comparison, the Phi-3 family initially arrived in three variants: phi-3-mini (3.8B parameters), phi-3-small (7B), and phi-3-medium (14B). The mini version was trained on Nvidia H100 GPUs using 3.3 trillion tokens. Despite its small size, its performance rivals that of models such as Mixtral 8x7B and ChatGPT (GPT-3.5): it achieves 69% on MMLU and 8.38 on MT-bench, yet is small enough to be deployed on a phone. The innovation lies entirely in the training dataset, a scaled-up version of the one used for phi-2, composed of heavily filtered web data and synthetic data.
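The claim that phi-3-mini fits on a phone can be sanity-checked with simple arithmetic. The sketch below estimates weight storage only, assuming footprint equals parameter count times bytes per parameter at a few common precisions (fp16, int8, int4). It ignores the KV cache, activations, and runtime overhead, so real memory use will be higher, and the precision choices are illustrative rather than a statement about how Microsoft ships these models.

```python
# Approximate weight-only memory for the Phi-3 variants named above.
# Assumption: footprint ~= parameter count x bytes per parameter.
# KV cache, activations, and runtime overhead are deliberately ignored.
PHI3_PARAMS = {
    "phi-3-mini": 3.8e9,
    "phi-3-small": 7e9,
    "phi-3-medium": 14e9,
}
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_footprint_gib(params: float, precision: str) -> float:
    """Weight storage in GiB for a given parameter count and precision."""
    return params * BYTES_PER_PARAM[precision] / 2**30


for name, params in PHI3_PARAMS.items():
    sizes = ", ".join(
        f"{precision}: {weight_footprint_gib(params, precision):.1f} GiB"
        for precision in BYTES_PER_PARAM
    )
    print(f"{name} -> {sizes}")
```

At 4-bit precision, phi-3-mini's 3.8 billion parameters come to roughly 1.8 GiB of weights, versus about 7.1 GiB at fp16, which illustrates why quantization matters for on-device deployment.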

Microsoft has emphasized the superior performance of the Phi-3 models, stating that they "significantly outperform language models of the same and larger sizes on key benchmarks." The company claims that Phi-3-mini surpasses models twice its size, while Phi-3-small and Phi-3-medium outshine even larger models, including GPT-3.5.

phi-3-mini Benchmark Results Comparison

Here are the results for phi-3-mini on standard open-source benchmarks measuring the model's reasoning ability (both common-sense and logical reasoning). The table includes comparisons with phi-2 [JBA+23], Mistral-7b-v0.1 [JSM+23], Mixtral-8x7b [JSR+24], Gemma 7B [TMH+24], Llama-3-instruct-8b [AI23], and GPT-3.5. All reported numbers were produced with exactly the same pipeline to ensure that they are comparable.

Apple OpenELM Performance and Results

According to Apple, OpenELM performs well across a range of benchmarks. In zero-shot settings, it surpasses existing open-source LLMs of comparable size, which Apple attributes to its efficient architecture. OpenELM also shows strong results in few-shot settings, and instruction tuning further improves its capabilities.

Apple chose MobiLlama and OLMo as baselines because they are pre-trained on public datasets using a similar or larger number of tokens. Apple evaluated OpenELM, MobiLlama, and OLMo using the same version of the LM evaluation harness. Results for other models are taken from their official GitHub repositories and the Open LLM Leaderboard. The best task accuracy for each model category is highlighted in bold, and models pre-trained on less data are highlighted in gray.
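Both the Phi-3 and OpenELM comparisons rest on the same idea: score every model with one shared evaluation procedure, then compare task by task. The hypothetical sketch below illustrates that with an exact-match accuracy scorer and a helper that bolds the best score per task, as the OpenELM tables do. It is not Apple's LM evaluation harness or Microsoft's pipeline; the function names, model names, and answers are invented for illustration and are not benchmark results. To mirror per-category highlighting, `bold_best` would be called separately on each size category.

```python
from typing import Dict, Sequence


def accuracy(predictions: Sequence[str], gold: Sequence[str]) -> float:
    """Exact-match accuracy; the same scorer is applied to every model."""
    if not gold or len(predictions) != len(gold):
        raise ValueError("predictions and gold must be non-empty and the same length")
    return sum(p == g for p, g in zip(predictions, gold)) / len(gold)


def bold_best(scores: Dict[str, Dict[str, float]]) -> Dict[str, Dict[str, str]]:
    """Format scores[model][task] as percentages, bolding the best score per task."""
    tasks = {task for per_model in scores.values() for task in per_model}
    table: Dict[str, Dict[str, str]] = {model: {} for model in scores}
    for task in sorted(tasks):
        best = max(per_model[task] for per_model in scores.values() if task in per_model)
        for model, per_model in scores.items():
            if task in per_model:
                cell = f"{per_model[task] * 100:.2f}"
                # Ties for the top score are all bolded.
                table[model][task] = f"**{cell}**" if per_model[task] == best else cell
    return table


if __name__ == "__main__":
    # Hypothetical toy data, not real benchmark answers.
    gold = ["A", "B", "C", "D"]
    predictions = {
        "model_x": ["A", "B", "C", "A"],
        "model_y": ["A", "C", "C", "A"],
    }
    scores = {m: {"toy_task": accuracy(p, gold)} for m, p in predictions.items()}
    for model, row in bold_best(scores).items():
        print(model, row)
```

Using one scorer for every model is the point of running a shared harness: differences in the table then reflect the models rather than differences in how answers were parsed or scored.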

Empowering Developers and Enhancing User Experiences

The introduction of Phi-Silica marks a pivotal moment: an advanced model optimized for Windows is being made available directly to third-party developers. With the model embedded in all Copilot+ PCs starting in June, it can power both first-party and third-party experiences aimed at improving productivity and accessibility within the Windows ecosystem.

With Phi-Silica joining Phi-3-mini, Phi-3-small, Phi-3-medium, and Phi-3-vision, Microsoft continues to push the boundaries of what is possible with small language models. As the company invests further in SLMs and integrates them into its products, users can expect new gains in productivity and innovation.

The arrival of Phi-Silica in Copilot+ PCs is an exciting step toward bringing generative AI to a mass audience, and it will be fascinating to see the applications developers build with it.
