AI Summary

Apple has released OpenELM, a family of open-source language models designed to run directly on devices. The suite consists of eight models with parameter counts from 270 million to 3 billion, trained on publicly available datasets and benchmarked on both an Intel/NVIDIA workstation and Apple silicon. According to Apple, its efficient architecture delivers accuracy gains over comparably sized open models, and the release aims to democratize AI development and push the boundaries of on-device AI.

Apple, a company known for its walled-garden approach, has made a surprising move by releasing OpenELM, a collection of small, open-source AI models designed to run directly on devices rather than on cloud servers. OpenELM consists of eight models ranging from 270 million to 3 billion parameters. Apple states that the models are intended for on-device applications and are suited to commodity laptops and even some smartphones.

The models were pre-trained on a dataset of about 1.8 trillion tokens and benchmarked on a workstation with an Intel i9-13900KF CPU and an NVIDIA RTX 4090 GPU, as well as on an Apple MacBook Pro with an M2 Max system-on-chip. While they are not the most powerful models available, they perform well on some benchmarks, especially the 450-million-parameter instruct variant. Over the long term, OpenELM could improve further as the community adopts it for various applications.

This development has sent ripples through the tech world, and it seems likely that these models will eventually appear in future iPhones. The move aligns Apple with other tech giants such as Google, Samsung, and Microsoft, which have been actively exploring generative AI for PCs and mobile devices. Microsoft, for example, just released phi-3-mini, a 3.8-billion-parameter language model trained on a whopping 3.3 trillion tokens. Despite its small size, Microsoft reports that its performance is on par with models such as Mixtral 8x7B and ChatGPT (GPT-3.5).

What Makes OpenELM Stand Out?

- Open-Source Everything: Unlike many existing LLMs that only release model weights or limited code, OpenELM provides the entire training and evaluation framework. This includes training logs, checkpoints, configurations, and even code for running the model on Apple devices.

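- Efficient Architecture: OpenELM uses a "layer-wise scaling" approach that allocates parameters non-uniformly across transformer layers, varying the number of attention heads and the feed-forward network (FFN) width from layer to layer instead of giving every layer the same configuration. Apple reports that this yields better accuracy per parameter than open-source counterparts such as OLMo: with a budget of roughly one billion parameters, OpenELM is 2.36% more accurate than OLMo while requiring half as many pre-training tokens.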

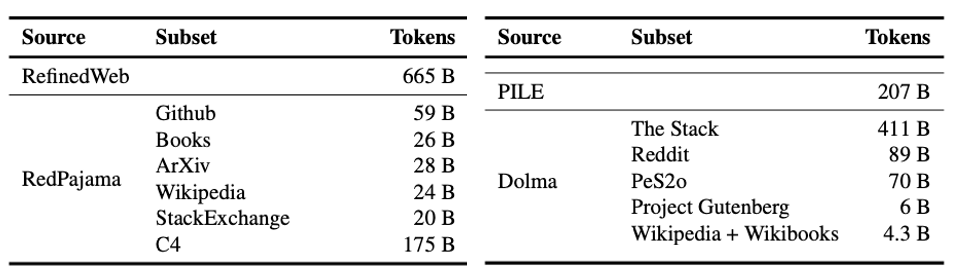

- Public Datasets: OpenELM is trained on a combination of publicly available datasets, including RefinedWeb, the Pile, RedPajama, and Dolma. Because the training data is publicly accessible, researchers can scrutinize it for potential biases.
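The layer-wise scaling idea from the architecture bullet can be sketched as follows. In the OpenELM paper, per-layer scaling factors α and β are interpolated linearly from a minimum at the first layer to a maximum at the last; α scales the number of attention heads (each head keeps a fixed dimension) and β sets the FFN width as a multiple of the model dimension. The function name, the simple rounding, and the hyperparameter values in the example are illustrative assumptions, not Apple's actual configuration.

```python
from dataclasses import dataclass


@dataclass
class LayerConfig:
    num_heads: int
    ffn_dim: int


def layer_wise_scaling(
    num_layers: int,
    d_model: int,
    head_dim: int,
    alpha: tuple[float, float],
    beta: tuple[float, float],
) -> list[LayerConfig]:
    """Linearly interpolate attention and FFN width from the first to the last layer."""
    alpha_min, alpha_max = alpha
    beta_min, beta_max = beta
    configs = []
    for i in range(num_layers):
        # Position in the stack: 0.0 for the first layer, 1.0 for the last.
        t = i / (num_layers - 1) if num_layers > 1 else 0.0
        a = alpha_min + (alpha_max - alpha_min) * t
        b = beta_min + (beta_max - beta_min) * t
        # Head count scales with alpha; every head keeps the same dimension.
        num_heads = max(1, round(a * d_model / head_dim))
        # FFN hidden size is this layer's multiplier beta times d_model.
        ffn_dim = round(b * d_model)
        configs.append(LayerConfig(num_heads=num_heads, ffn_dim=ffn_dim))
    return configs


# Example: 4 layers, d_model = 1024, 64-dim heads (illustrative values only).
for i, cfg in enumerate(layer_wise_scaling(4, 1024, 64, alpha=(0.5, 1.0), beta=(0.5, 4.0))):
    print(i, cfg)
```

Early layers get fewer heads and narrower FFNs and later layers get more, so the parameter budget is redistributed across depth rather than spread uniformly. A production model would likely also round these widths to hardware-friendly multiples, which this sketch skips.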

8 Models Available Now on Hugging Face

Apple has released the OpenELM suite on Hugging Face. It consists of eight models in total, four pre-trained and four instruction-tuned, with parameter counts ranging from 270 million to 3 billion.
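The eight checkpoints are four sizes (270M, 450M, 1.1B, and 3B) times two variants. The sketch below enumerates them; it assumes the Hub naming pattern `apple/OpenELM-<size>` and `apple/OpenELM-<size>-Instruct`, with `1_1B` standing for the 1.1-billion-parameter size, which should be checked against the Hugging Face Hub before use.

```python
# The four OpenELM sizes: 270M, 450M, 1.1B and 3B parameters.
# Assumption: Hub repo names follow "apple/OpenELM-<size>[-Instruct]".
SIZES = ["270M", "450M", "1_1B", "3B"]


def openelm_repo_ids(org: str = "apple") -> list[str]:
    """List the pre-trained and instruction-tuned repo IDs for every size."""
    repo_ids = []
    for size in SIZES:
        base = f"{org}/OpenELM-{size}"
        repo_ids.append(base)                # pre-trained variant
        repo_ids.append(f"{base}-Instruct")  # instruction-tuned variant
    return repo_ids


if __name__ == "__main__":
    for repo_id in openelm_repo_ids():
        print(repo_id)
```

Loading one of these IDs with a library such as Hugging Face `transformers` would typically also require `trust_remote_code=True`, because OpenELM ships custom modeling code; confirm the exact loading instructions on each model card.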

Pre-training is essential for large language models (LLMs) to generate coherent and potentially useful text. However, pre-training alone does not always produce the most relevant outputs for specific user requests. This is where instruction tuning comes in: by fine-tuning the models on examples of instructions paired with desired responses, LLMs learn to give more accurate and targeted answers to user prompts.
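A common way to implement instruction tuning, shown here as a generic sketch rather than Apple's exact recipe, is to concatenate the prompt and the desired response and compute the loss only on the response tokens. The token IDs below are made-up placeholders, and `build_sft_example` is a hypothetical helper; `-100` is the label value that PyTorch's `CrossEntropyLoss` ignores by default.

```python
IGNORE_INDEX = -100  # PyTorch's CrossEntropyLoss skips this label by default


def build_sft_example(
    prompt_ids: list[int], response_ids: list[int]
) -> tuple[list[int], list[int]]:
    """Build (input_ids, labels) for one instruction-response pair.

    The model sees the whole sequence, but prompt positions are masked
    so that only the response tokens contribute to the training loss.
    """
    input_ids = prompt_ids + response_ids
    labels = [IGNORE_INDEX] * len(prompt_ids) + list(response_ids)
    return input_ids, labels


# Hypothetical token IDs for an instruction (prompt) and its target answer.
input_ids, labels = build_sft_example([101, 2054, 2003], [7592, 102])
print(input_ids)  # [101, 2054, 2003, 7592, 102]
print(labels)     # [-100, -100, -100, 7592, 102]
```

The labels are aligned with the inputs rather than pre-shifted, because many causal-LM training setups (including Hugging Face models) shift labels by one position internally for next-token prediction.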

Apple has made the weights of its OpenELM models available under a "sample code license," along with various checkpoints from training, performance statistics, and instructions for pre-training, evaluation, instruction tuning, and parameter-efficient fine-tuning. This license allows for commercial usage and modification, with the only requirement being the retention of the notice, text, and disclaimers when redistributing the software in its entirety and without modifications.

It is important to note that Apple has released these models without any safety guarantees, acknowledging the possibility of inaccurate, harmful, biased, or objectionable outputs in response to user prompts. This transparency is crucial for developers and researchers who plan to work with these models.

Performance and Results

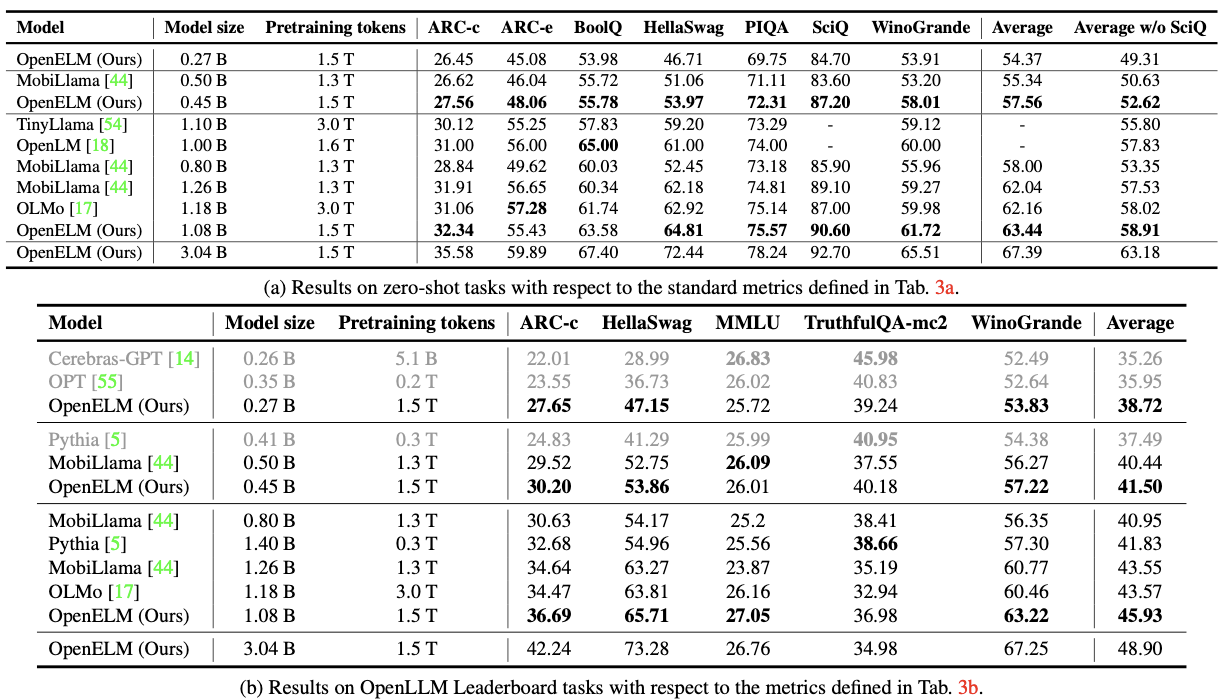

OpenELM demonstrates strong performance across various benchmarks. According to Apple's results, in zero-shot settings it surpasses existing open-source LLMs of comparable size, reflecting its efficient architecture. OpenELM also shows strong results in few-shot scenarios, and instruction tuning improves its capabilities further.

Apple chose MobiLlama and OLMo as baselines because they are pre-trained on public datasets using a similar or larger number of tokens. Apple evaluated OpenELM, MobiLlama, and OLMo using the same version of the LM evaluation harness; results for other models are taken from their official GitHub repositories and the Open LLM Leaderboard. In Apple's results table, the best task accuracy for each model category is highlighted in bold, and models pre-trained with less data are highlighted in gray.
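Zero-shot multiple-choice tasks in LM evaluation harnesses are commonly scored by comparing the log-likelihood the model assigns to each answer choice and picking the highest. The minimal sketch below assumes the per-token log-probabilities have already been computed by a model; the helper names are hypothetical and the toy probabilities are invented for illustration, not OpenELM results.

```python
import math


def pick_choice(choice_token_logprobs: list[list[float]]) -> int:
    """Return the index of the choice whose tokens have the highest total log-probability."""
    totals = [sum(logprobs) for logprobs in choice_token_logprobs]
    return max(range(len(totals)), key=lambda i: totals[i])


def zero_shot_accuracy(examples: list[tuple[list[list[float]], int]]) -> float:
    """Fraction of examples where the highest-scoring choice is the gold answer."""
    correct = sum(pick_choice(choices) == gold for choices, gold in examples)
    return correct / len(examples)


# Invented per-token probabilities for two 2-choice questions (not real model output).
toy_examples = [
    # Choice 0 spans two tokens (0.2 * 0.5 = 0.1); choice 1 is one token (0.6). Gold: 1.
    ([[math.log(0.2), math.log(0.5)], [math.log(0.6)]], 1),
    # Choice 0 (0.9) beats choice 1 (0.3), but the gold answer is 1.
    ([[math.log(0.9)], [math.log(0.3)]], 1),
]
print(zero_shot_accuracy(toy_examples))  # 0.5
```

Summing log-probabilities favors shorter answers, which is why evaluation harnesses often also report a length-normalized accuracy alongside the raw one.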

Apple benchmarked the models on modern, consumer-grade hardware, using BFloat16 as the data type. Specifically, CUDA benchmarks were run on a workstation with an Intel i9-13900KF CPU, 64 GB of DDR5-4000 DRAM, and an NVIDIA RTX 4090 GPU with 24 GB of VRAM, running Ubuntu 22.04. Apple used PyTorch v2.2.2 along with the most recent versions of the models and associated libraries.
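BFloat16 stores each value in 2 bytes instead of float32's 4, which halves the memory needed for the weights. The back-of-the-envelope sketch below uses nominal parameter counts, so the figures are approximate, and it counts weights only; activations, the attention KV cache, and framework overhead need additional memory. The helper `weight_memory_gib` is hypothetical.

```python
# Bytes used to store one parameter in each data type.
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2}

# Nominal sizes of the four OpenELM models (exact parameter counts differ slightly).
NOMINAL_PARAMS = {"270M": 270e6, "450M": 450e6, "1.1B": 1.1e9, "3B": 3e9}


def weight_memory_gib(num_params: float, dtype: str = "bfloat16") -> float:
    """Memory needed to hold the weights alone, in GiB (2**30 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30


for name, n in NOMINAL_PARAMS.items():
    print(f"OpenELM-{name}: {weight_memory_gib(n):.2f} GiB in bfloat16, "
          f"{weight_memory_gib(n, 'float32'):.2f} GiB in float32")
```

By this estimate, even the 3B model's bfloat16 weights (about 5.6 GiB) fit comfortably in the RTX 4090's 24 GB of VRAM.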

Apple also benchmarked the OpenELM models on Apple silicon, using an Apple MacBook Pro with an M2 Max system-on-chip and 64 GB of RAM, running macOS 14.4.1.

Apple's Growing Interest in Open-Source AI

The release of OpenELM follows Apple's previous open-source AI model, Ferret, which made headlines in October for its multimodal capabilities. These releases mark a significant shift in Apple's approach to AI, as the company has traditionally been known for its closed and secretive nature.

By embracing open-source AI models, Apple is not only contributing to the broader AI community but also demonstrating its commitment to advancing the field. This move may also attract more developers and researchers to explore and build upon Apple's AI technologies, potentially leading to new innovations and applications.

- Democratizing AI: By open-sourcing these models, Apple makes powerful AI tools accessible to a wider range of developers, potentially leading to a surge in innovative applications.

- Challenging the Status Quo: OpenELM is a direct challenge to the current trend of cloud-based AI, offering an alternative that prioritizes privacy and efficiency.

- Pushing the Boundaries of On-Device AI: OpenELM demonstrates the increasing capabilities of on-device machine learning, paving the way for more sophisticated AI features on our personal devices.

Apple's Commitment to Open Research

Apple's commitment to open-source principles has significant implications for the LLM landscape. By providing full transparency into the training and evaluation process, it fosters trust and encourages further research in the field. This accessibility can empower researchers to explore model biases, safety, and ethical considerations more effectively.

Whereas prior releases typically provided only model weights and inference code, and were pre-trained on private datasets, Apple has gone further. The release includes the complete framework for training and evaluating the language model on publicly available datasets, including training logs, multiple checkpoints, and pre-training configurations. Apple has also released code to convert the models to the MLX library for inference and fine-tuning on Apple devices, making it easier for researchers and developers to use OpenELM in their work.

The Future of OpenELM and On-Device AI

OpenELM represents a significant step forward in the realm of open language models. With its layer-wise scaling approach, impressive performance gains, and comprehensive release, OpenELM sets a new standard for open research in natural language processing. By empowering the open research community with access to state-of-the-art models and a complete framework for training and evaluation, Apple has paved the way for future open research endeavors.

It's exciting to imagine the possibilities that OpenELM unlocks. We can expect to see a surge in apps utilizing on-device AI for a more personalized, private, and efficient user experience. Imagine health apps that can analyze your movements with greater accuracy, educational tools that adapt to your learning style in real time, or creative apps that respond instantly to your gestures.

Apple's entry into the open-source AI space is a game-changer. OpenELM is not just a collection of tools, but a statement about the future of AI, one where privacy, efficiency, and user empowerment are at the forefront. And with its impressive performance benchmarks, OpenELM is setting a new standard for on-device AI capabilities.

Full Report: OpenELM: An Efficient Language Model Family with Open-source Training and Inference Framework
