AI Summary

At COMPUTEX 2025, ARM's Chris Bergey declared that the current shift to AI computing is a transformation "bigger than the internet" and "bigger than smartphones," emphasizing the critical role of power efficiency. He highlighted ARM's significant penetration in data centers, with over 50% of new AWS CPU capacity being ARM-based, and its dominance in mobile computing. ARM's strategy focuses on democratizing AI across all devices, optimizing for AI inference—the primary business opportunity—and developing next-generation hardware like the Travis CPU and Draga GPU.

Standing on stage at COMPUTEX 2025 in Taipei, Chris Bergey painted a picture of a computing world on the cusp of its most significant transformation since the internet. As ARM's Senior Vice President and General Manager of Client Business, Bergey didn't mince words: "This is bigger than the internet. This is bigger than smartphones."

The bold declaration came as part of ARM's comprehensive vision for artificial intelligence computing, one that spans from massive data centers to the devices in our pockets. But unlike the typical tech conference hyperbole, ARM backed up its claims with concrete achievements and a roadmap that could reshape how we think about computing power.

The Data Center Surprise: ARM's Quiet Conquest

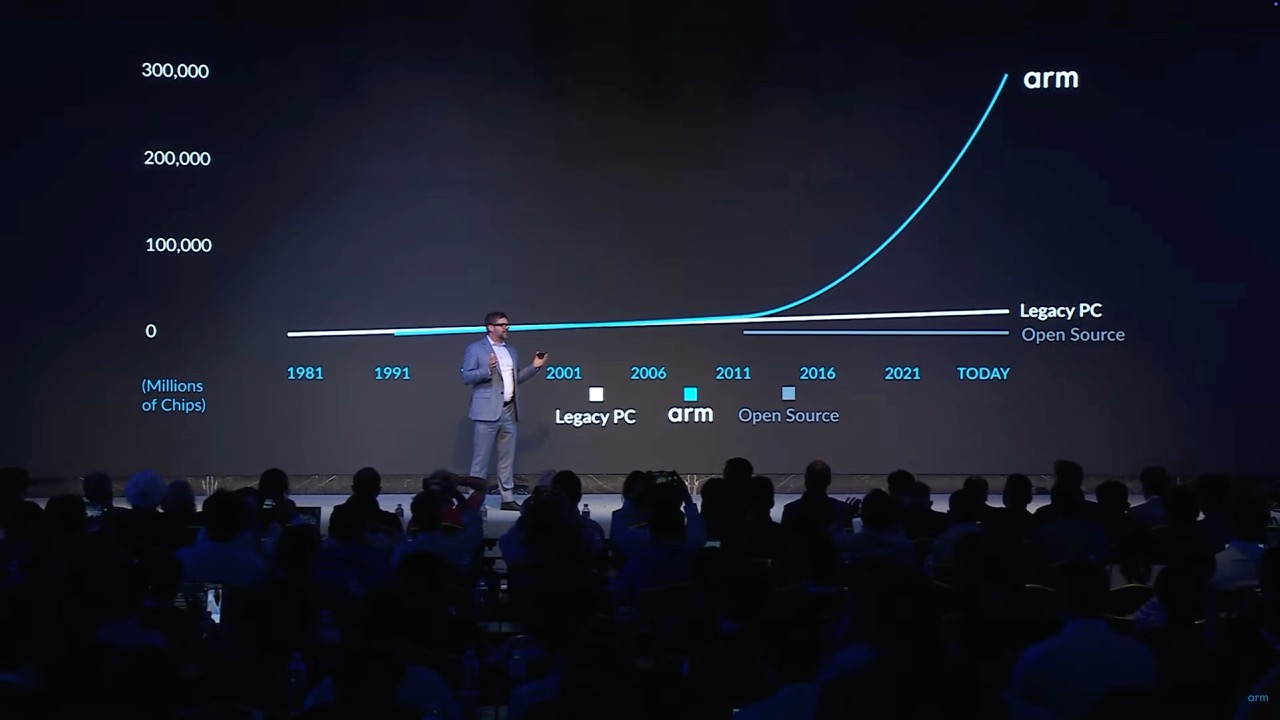

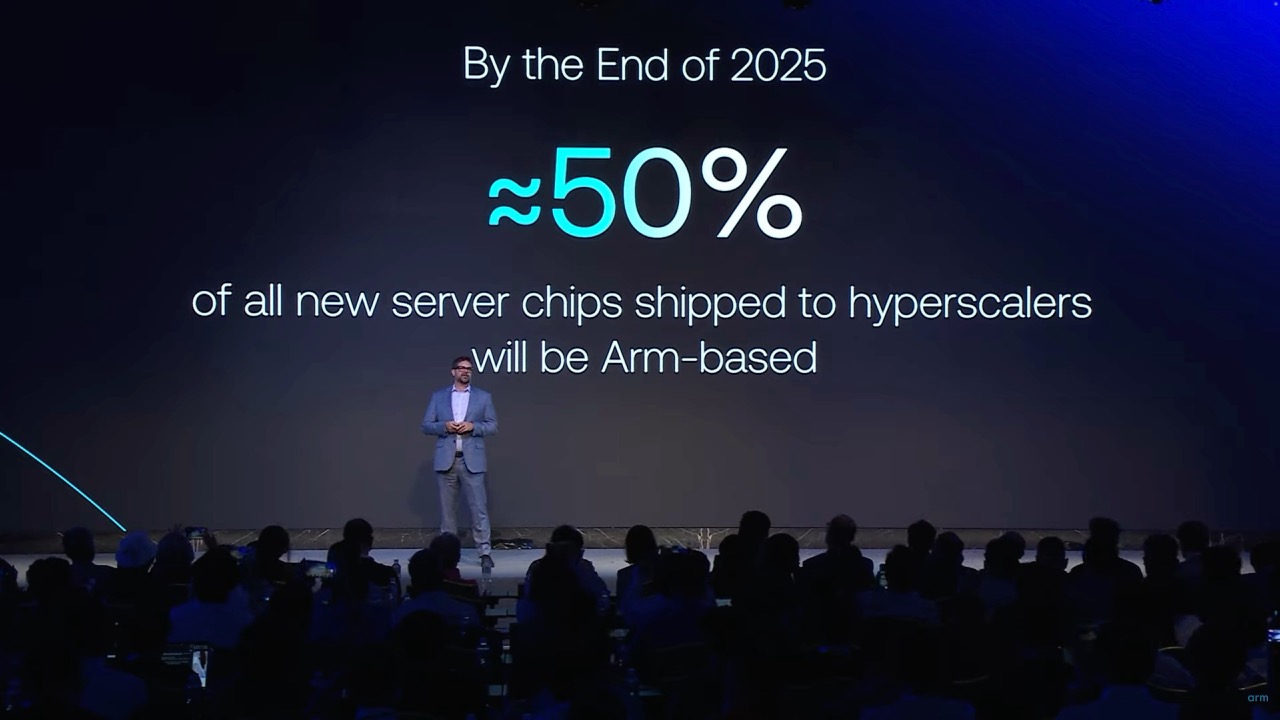

Perhaps the most striking revelation from Bergey's presentation was just how deeply ARM has penetrated the data center market. While many still think of ARM as primarily a mobile chip architecture, the numbers tell a different story.

Over 50% of new CPU capacity deployed by Amazon Web Services in the last two years was ARM-based, according to AWS's own statistics shared at their re:Invent conference.

This isn't just Amazon playing favorites with ARM. Over 90% of AWS's thousand largest EC2 customers are running workloads on ARM-powered Graviton processors. The appeal is clear: up to 40% better power efficiency compared to traditional x86 architectures.

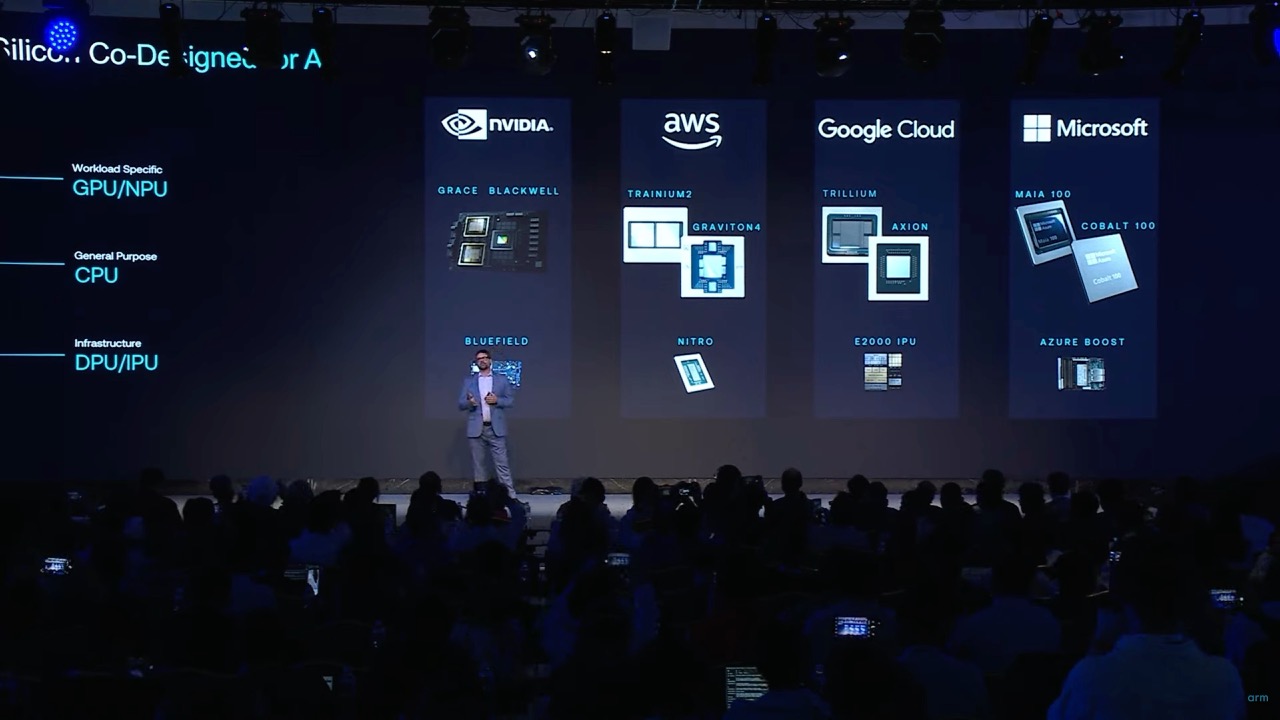

The trend extends beyond Amazon. Every major cloud provider now offers ARM-based options, from Microsoft's Cobalt processors to Google's Axion chips. Even Oracle and Alibaba have ARM partnerships in place.

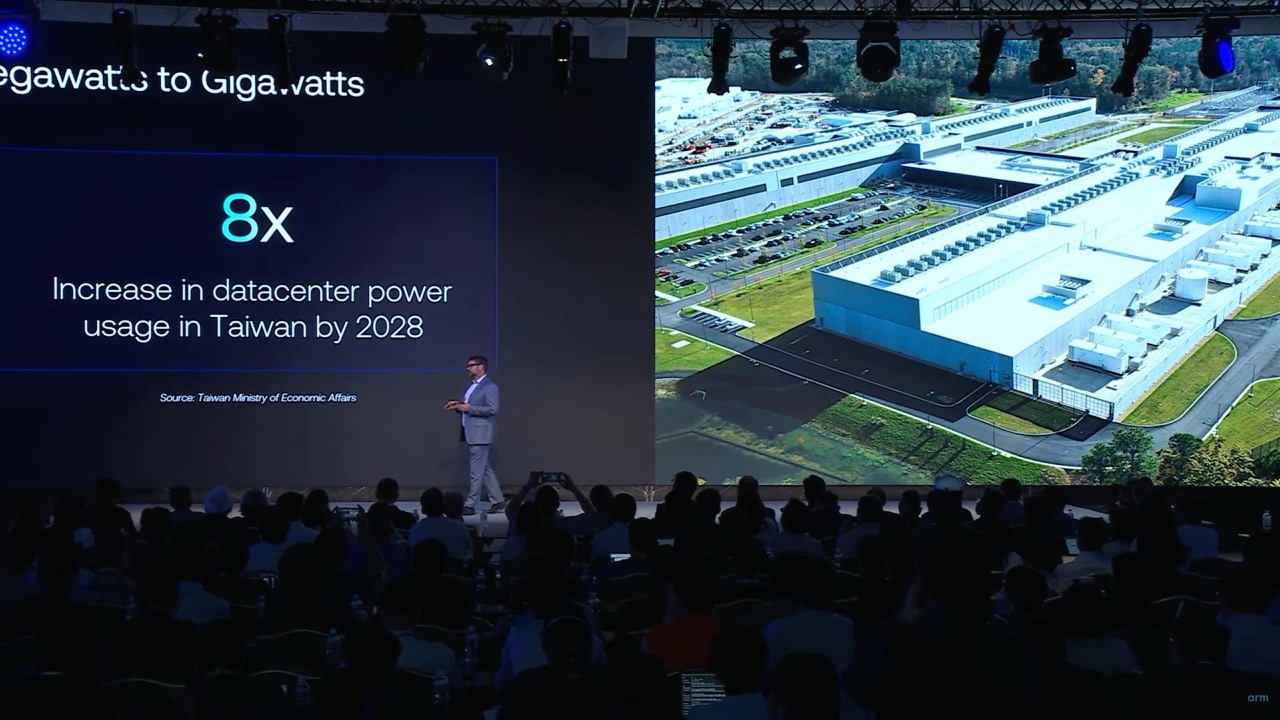

Bergey spent considerable time addressing what might be the defining challenge of the AI era: power consumption. The statistics he shared were sobering. Taiwan alone expects an 8x increase in data center usage, moving from megawatts to gigawatts of power consumption.

The problem isn't just about adding more servers. More than 50% of a data center's power goes directly to the computing racks and semiconductors. As AI workloads demand exponentially more processing power, the industry faces a fundamental choice: find more efficient ways to compute, or watch infrastructure costs spiral out of control.

"The simple truth is that we can talk about how wonderful AI is going to be and all the opportunity, but if we don't solve for efficiency, AI won't scale."

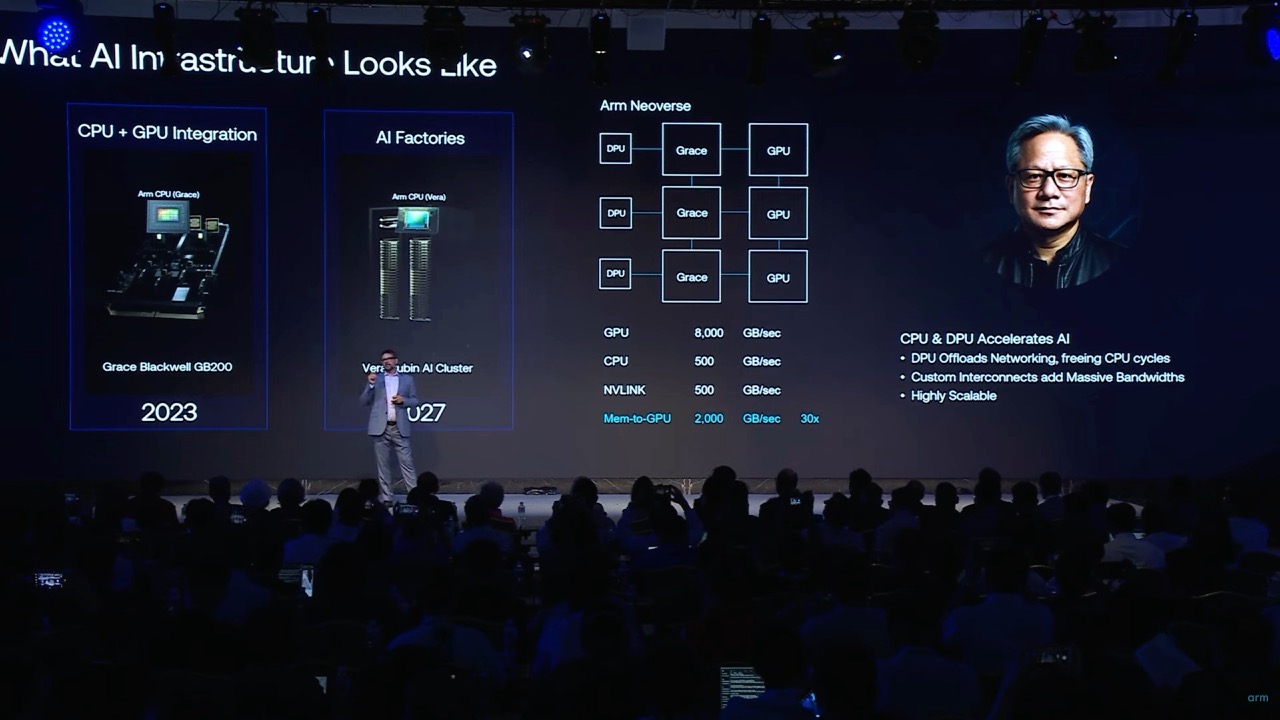

This efficiency challenge has driven some of the industry's biggest players to rethink their approach entirely. NVIDIA, traditionally reliant on commodity x86 servers, has moved to custom ARM-based solutions. Its latest Grace Blackwell systems tightly couple ARM-based Grace CPUs with Blackwell AI accelerators and are credited with up to a 25x efficiency improvement over previous architectures.

From Cloud Giants to Smartphones: ARM's Ubiquitous Strategy

ARM's strategy isn't just about conquering data centers. The company is leveraging its dominant position in mobile computing to create what Bergey calls "the most ubiquitous computing platform in history." With over 310 billion ARM-based chips shipped to date, the company has built an ecosystem that touches more devices and developers than any other platform.

This scale creates a virtuous cycle. More hardware running ARM architecture means more software optimized for ARM, which in turn drives demand for more ARM-based hardware. The company now claims over 22 million developers are writing software for ARM platforms.

The mobile revolution provides a template for ARM's AI ambitions. Just as smartphones transformed from luxury items to essential tools available globally, ARM sees AI following a similar democratization path. The key is making AI capabilities available across the full spectrum of devices, from high-end workstations to budget smartphones.

The Inference Revolution: Where the Money Really Is

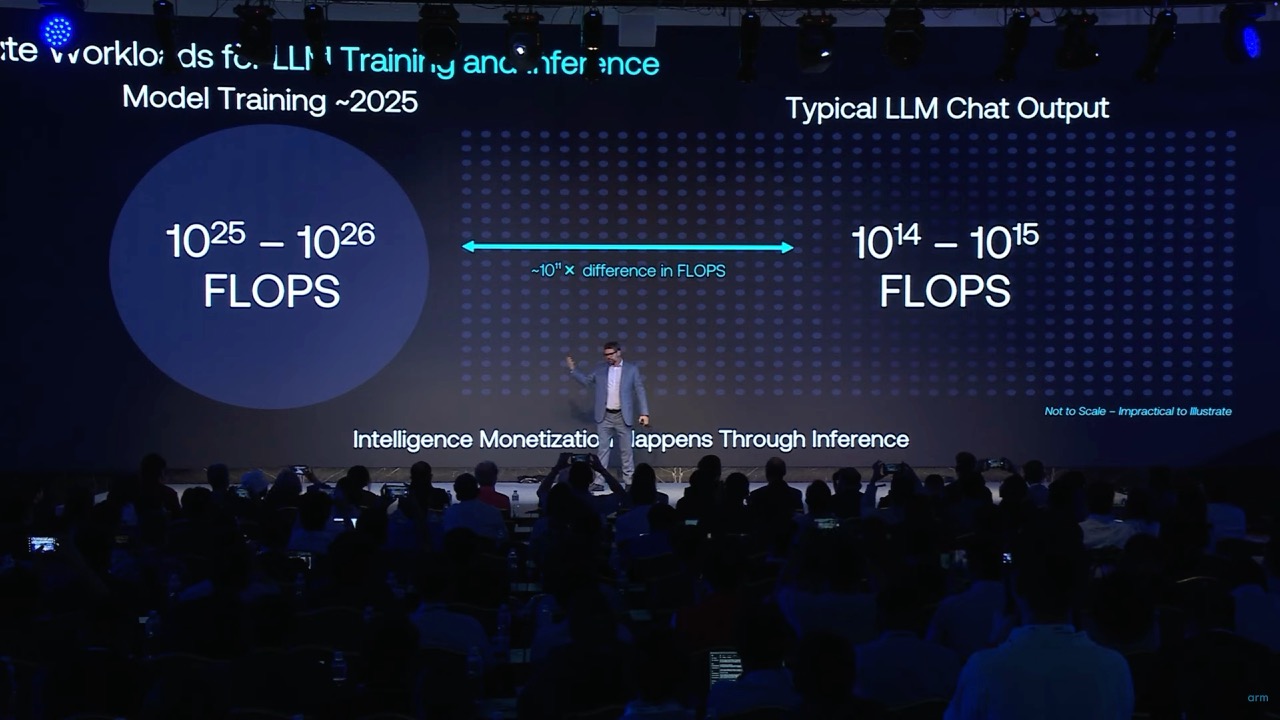

While much attention focuses on training large AI models, Bergey highlighted a crucial distinction that many overlook: the real business opportunity lies in AI inference, not training. The math is striking.

Training a foundation model requires approximately 10^25 to 10^26 floating-point operations. Running inference on that same model for a single query takes about 10^14 to 10^15 operations. That is a ratio of roughly 10^11: training a model once costs about as much compute as answering 100 billion queries.

To put this in perspective, Bergey noted that the total number of internet searches performed worldwide in a single day is roughly 10^10. If each of those searches were a model query, it would take only about ten days of that traffic (10^11 ÷ 10^10) for cumulative inference compute to equal the cost of training the model.

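Training is a one-time cost, while inference recurs with every query, so at this scale cumulative inference compute overtakes training within days. That explains why inference, not training, represents the primary revenue opportunity for most companies. It also explains why optimizing for inference efficiency has become so critical for the industry's economic sustainability.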
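The training-versus-inference arithmetic can be checked with a few lines of Python. This is a minimal sketch, assuming one model query per internet search and pairing the low ends and the high ends of the quoted ranges (the pairing that yields the 10^11 ratio); the function name `breakeven_days` and the constants are illustrative and not taken from the talk.

```python
import math

# Order-of-magnitude figures quoted in the talk.
TRAINING_FLOPS = (1e25, 1e26)   # total compute to train a foundation model
INFERENCE_FLOPS = (1e14, 1e15)  # compute per single inference query
SEARCHES_PER_DAY = 1e10         # worldwide internet searches per day

def breakeven_days(training_flops: float, flops_per_query: float,
                   queries_per_day: float) -> float:
    """Days of inference traffic needed for cumulative inference
    compute to equal the one-time training compute.

    Assumption (illustrative): every query costs the same compute
    and queries arrive at a constant daily rate."""
    queries_to_match = training_flops / flops_per_query
    return queries_to_match / queries_per_day

# Pair low-with-low and high-with-high, as the quoted 10^11 ratio implies.
for train, infer in zip(TRAINING_FLOPS, INFERENCE_FLOPS):
    ratio = train / infer
    days = breakeven_days(train, infer, SEARCHES_PER_DAY)
    print(f"ratio = 10^{math.log10(ratio):.0f}, break-even after {days:.0f} days")
```

Both pairings give a ratio of about 10^11 and a break-even of about ten days. Mixing the ends of the ranges (10^26 training operations against 10^14 per query) would stretch the break-even to roughly 100 days, which still leaves inference as the dominant long-run cost.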

Next-Generation Hardware: Travis and Draga

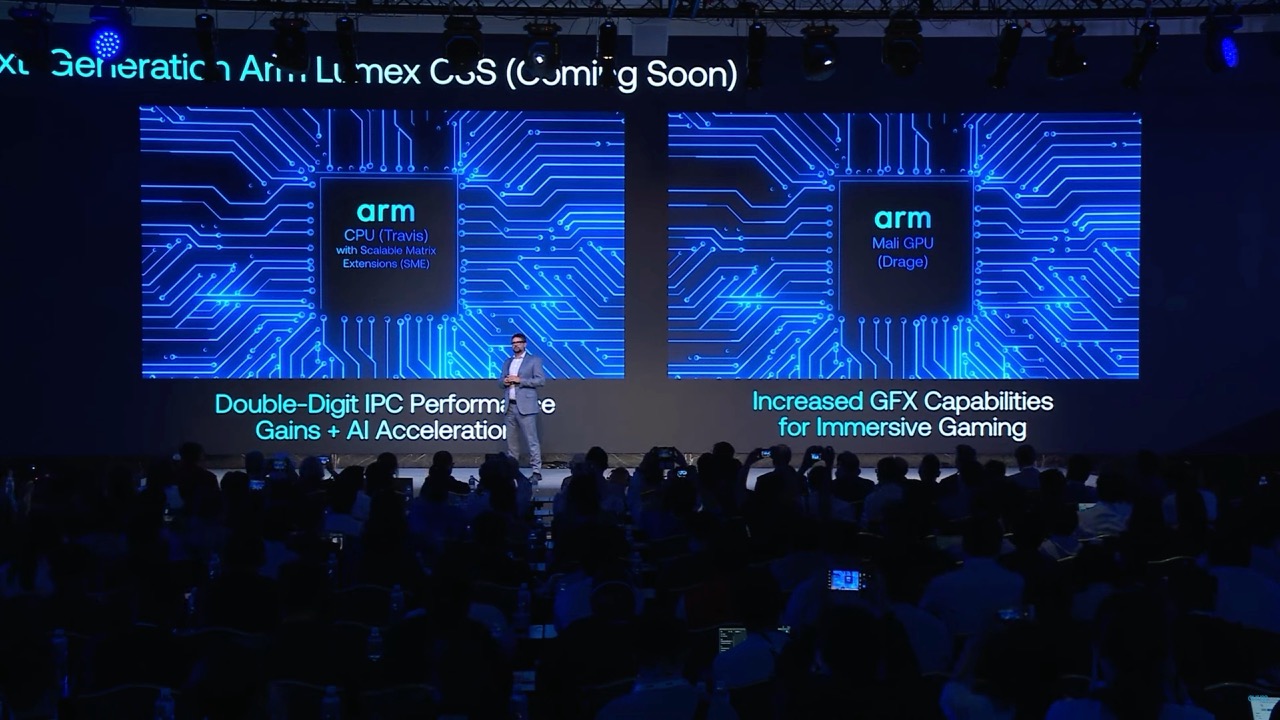

ARM isn't content to rest on its current success. Bergey provided a preview of the company's next-generation processor designs, starting with Travis, the successor to ARM's V9 architecture.

Travis promises significant improvements across multiple dimensions:

- Double-digit gains in instructions per clock cycle (IPC), building on ARM's already industry-leading performance

- Scalable Matrix Extension (SME) support for accelerating AI workloads directly in the CPU

- Common instruction set support for seamless migration across different ARM-based systems

- Native support for ARM's Kleidi AI libraries for optimized performance

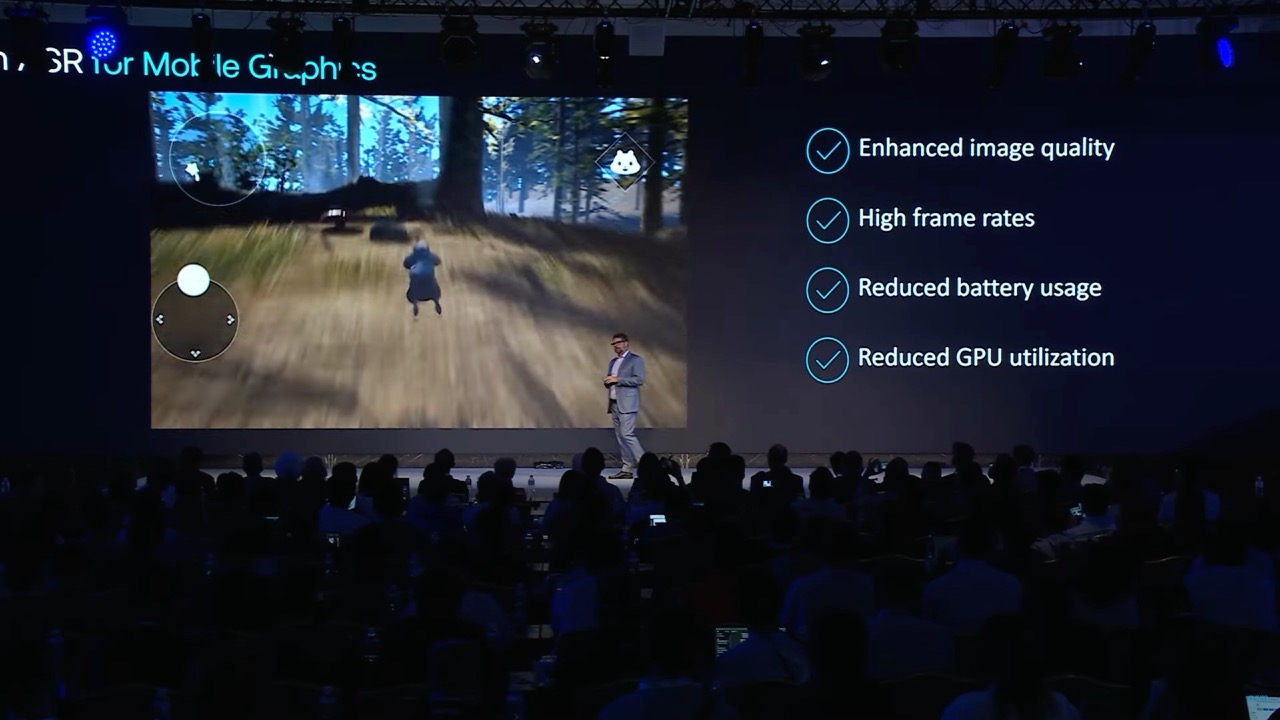

The Travis CPU will be paired with Draga, ARM's next-generation GPU designed specifically for mobile gaming and AI acceleration. Draga aims to deliver "console-level graphics" in mobile form factors, with enhanced ray tracing capabilities and support for popular game development tools like Unity and Unreal Engine.

The Ecosystem Advantage: Software Makes the Difference

One of Bergey's most telling anecdotes came from his visits to AI startups. He described a pattern he sees repeatedly: companies start with equal numbers of hardware and software engineers, but within 12-18 months, the hardware team is the same size while the software team has grown five-fold.

This shift reflects a fundamental truth about AI development: the software challenges are enormous and growing. ARM's response has been to invest heavily in its developer ecosystem, particularly through its Kleidi AI libraries.

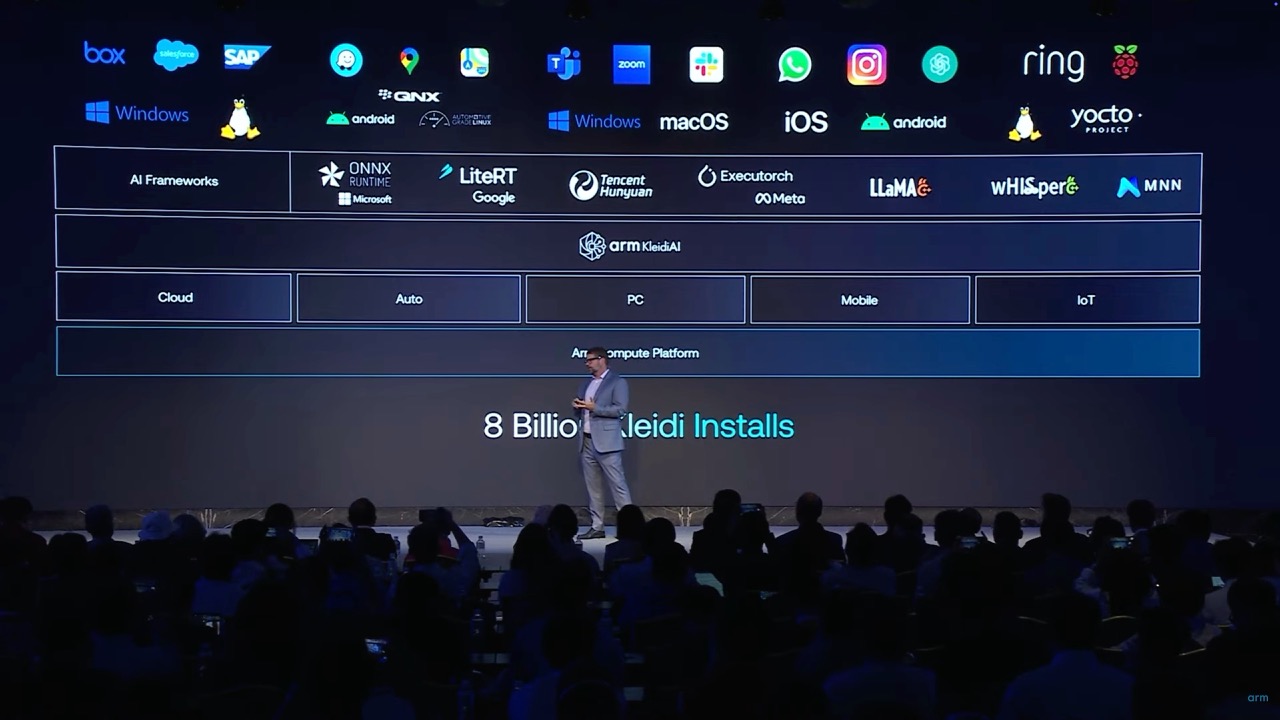

Launched at last year's COMPUTEX, Kleidi has already achieved remarkable adoption. The libraries have been integrated with major AI frameworks including Microsoft's ONNX Runtime, Google's LiteRT, Meta's ExecuTorch, and Tencent's ncnn. More importantly, Kleidi has registered over 8 billion installs across ARM-powered devices.

This software foundation allows developers to tap into ARM's AI performance optimizations across billions of devices without having to write platform-specific code. It's the kind of ecosystem advantage that's difficult for competitors to replicate.

The Physical AI Future: Beyond Digital Assistants

Bergey's vision extends beyond traditional computing into what he calls "physical AI" - AI that can sense, interact with, and respond to the physical world. This isn't science fiction; it's already happening in manufacturing and logistics applications.

The transition from cloud-based AI assistants to edge-based AI agents represents a fundamental shift in how we interact with technology. Six months ago, most AI assistants ran entirely in the cloud. Today, new AI systems are being designed for edge deployment first, enabling experiences that simply weren't possible before.

The Ray-Ban Meta smart glasses, an ARM-powered device highlighted during the presentation, exemplify this shift. These aren't experimental devices for early adopters - they're the top-selling product in more than 60 Ray-Ban stores across Europe. The seamless, voice-activated AI interaction they enable hints at a future where AI assistance becomes as natural as conversation.

Industry Partnerships: The NVIDIA and MediaTek Perspective

Bergey was joined on stage by executives from two key ARM partners: Kevin Deierling from NVIDIA and Adam King from MediaTek. Their perspectives provided additional insight into how the AI ecosystem is evolving.

Deierling emphasized that 2025 will be "the year of inference," but cautioned that inference is far more complex than many initially assumed. As AI systems evolve from simple question-and-answer interactions to sophisticated agent workflows where AIs communicate with other AIs, latency becomes critical. The networking and infrastructure requirements for these systems are substantial.

King, representing MediaTek's personal devices business, highlighted the transformational impact of AI across device categories. Even three-year-old laptops perform better today than when they were new, thanks to cloud-based AI services. As workloads move to the edge, the opportunities for improving device experiences multiply.

Looking Ahead: Challenges and Opportunities

Despite ARM's optimistic vision, significant challenges remain. Power consumption continues to be a limiting factor, particularly as AI models grow larger and more sophisticated. The industry faces a talent shortage in semiconductor design and AI engineering that could constrain growth.

There's also the question of competition. Intel, AMD, and other processor manufacturers aren't standing still. The AI revolution has reset many assumptions about computing architecture, creating opportunities for both established players and new entrants.

However, ARM's ecosystem advantages are substantial. The company's three-decade focus on power efficiency has prepared it well for an era where energy consumption is a primary constraint. Its ubiquitous presence in mobile devices provides a natural foundation for edge AI deployment. And its partnerships with major cloud providers give it credibility in high-performance computing applications.

As Bergey concluded his presentation, he framed the current moment as "a once-in-a-lifetime opportunity for all of us to imagine, to design, and to deliver next-generation platforms of the future." Whether ARM can execute on its ambitious vision remains to be seen, but the company has certainly positioned itself at the center of computing's next revolution.
