AI Summary

Apple's Matrix3D is a new unified model that streamlines 3D content creation from 2D images by handling multiple photogrammetry tasks, such as pose estimation, depth prediction, and novel view synthesis, within a single diffusion transformer architecture. It works with sparse inputs, uses a mask learning strategy to handle incomplete data, and offers significant efficiency and performance improvements over traditional multi-stage pipelines.

Apple

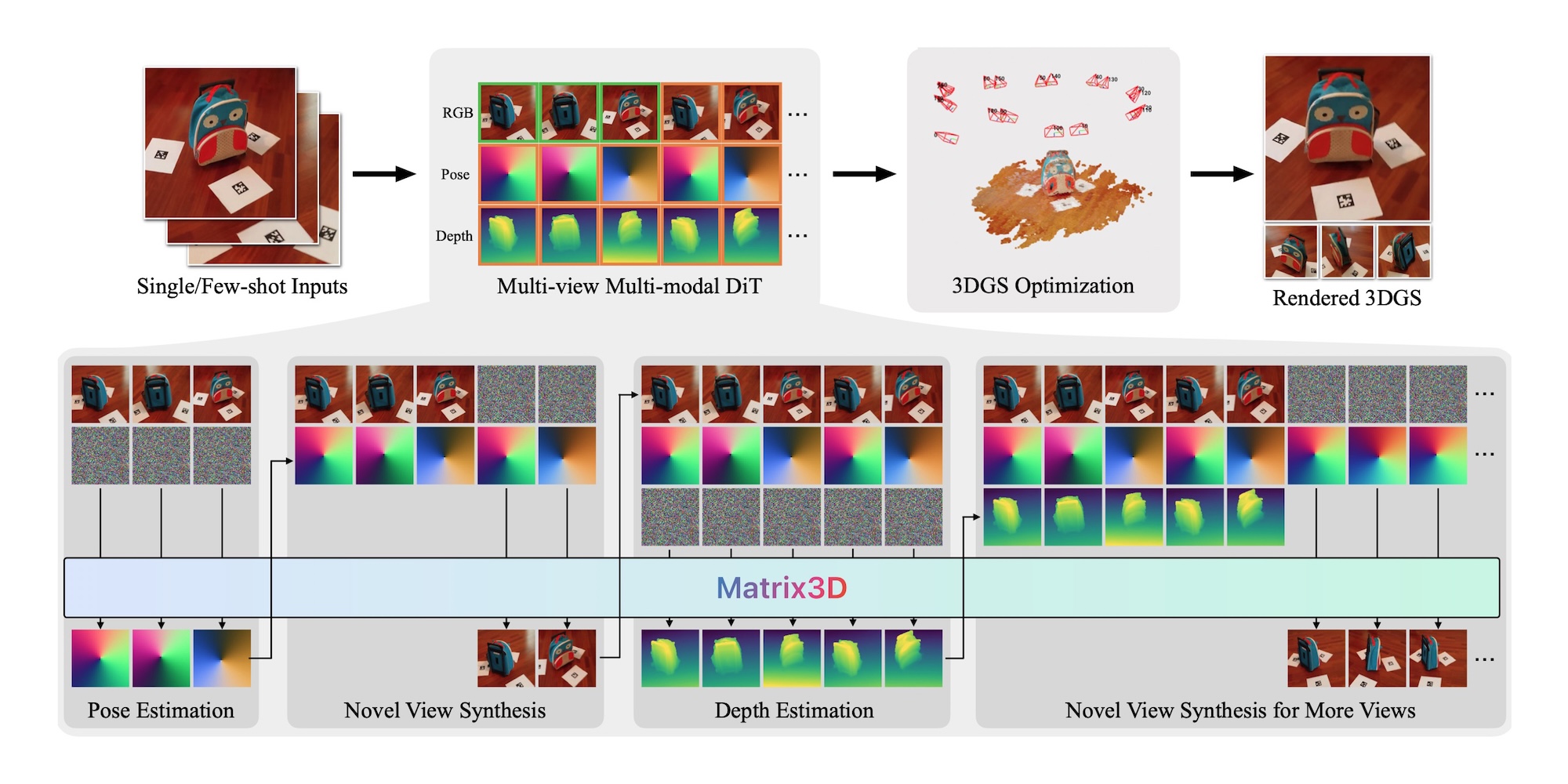

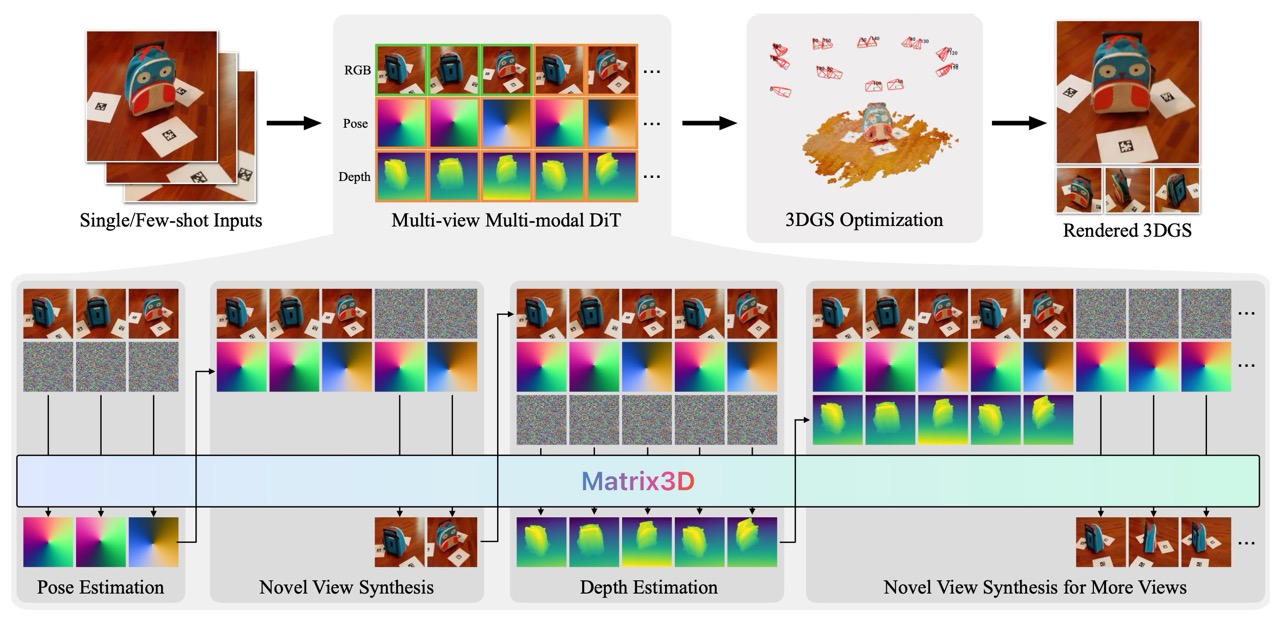

Apple has introduced Matrix3D, a unified model that handles multiple photogrammetry tasks simultaneously. This innovation could fundamentally change how we transform 2D images into immersive 3D experiences, making the process more efficient and accessible to creators across industries.

Until now, creating 3D content from photographs has been a complex, multi-stage process requiring different specialized tools for each step. Matrix3D changes this paradigm by combining several key tasks—pose estimation, depth prediction, and novel view synthesis—into a single cohesive model.

Understanding the Technical Architecture

At its core, Matrix3D uses a multi-modal diffusion transformer (DiT) architecture to integrate three types of data: regular images, camera parameters, and depth maps. What sets this approach apart is that these data types are processed together, allowing the system to handle tasks that traditionally required separate specialized tools.
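Processing cameras alongside images and depth maps requires encoding camera parameters in an image-aligned form. One widely used choice in recent multi-view diffusion work is a per-pixel ray map in Plücker coordinates, where each pixel stores its ray direction d and moment o × d. The sketch below assumes that representation and a simple pinhole camera; the function `plucker_ray_map` and its parameters are hypothetical and not taken from Apple's code.

```python
import math


def _normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)


def _cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def _matvec(m, v):
    return tuple(sum(m[i][j] * v[j] for j in range(3)) for i in range(3))


def _transpose(m):
    return [[m[j][i] for j in range(3)] for i in range(3)]


def plucker_ray_map(fx, fy, cx, cy, R, t, width, height):
    """Return a height x width grid of 6-D Plücker rays (d, o x d) in world coordinates.

    Hypothetical helper. Assumes a pinhole camera with world-to-camera
    rotation R (3x3 nested list) and translation t, i.e. x_cam = R x_world + t.
    """
    Rt = _transpose(R)
    # Camera center in world coordinates: o = -R^T t
    o = tuple(-c for c in _matvec(Rt, t))
    rays = []
    for v in range(height):
        row = []
        for u in range(width):
            # Back-project the pixel center to a direction in the camera frame,
            # then rotate it into the world frame.
            d_cam = ((u + 0.5 - cx) / fx, (v + 0.5 - cy) / fy, 1.0)
            d = _normalize(_matvec(Rt, d_cam))
            # The moment o x d identifies the ray independently of which point on it is used.
            row.append(d + _cross(o, d))
        rays.append(row)
    return rays
```

Because each view becomes a 6-channel map at image resolution, a design like this lets cameras be split into patches and tokenized the same way as RGB images and depth maps, so one transformer can attend across all three modalities.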

The technology behind Matrix3D isn't just about convenience; it represents a fundamental shift in how AI systems can approach 3D reconstruction. Traditional photogrammetry pipelines require dense collections of images and separate processing stages such as structure-from-motion (SfM) and multi-view stereo (MVS). Each stage is handled by a distinct algorithm, and these algorithms are not optimized to work together.

In contrast, Matrix3D's unified approach allows it to handle sparse inputs (even single images in some cases) and process them through one coherent system, minimizing the accumulation of errors that often occurs in multi-stage processes.

The Mask Learning Strategy and Potential Applications

Real-world 3D data is often fragmented: some datasets include images with camera poses but no depth information, while others have depth but lack precise camera parameters. Traditional systems struggle with this inconsistency.

Matrix3D overcomes this limitation by training on partially masked inputs. Similar to how humans can infer missing information based on context, Matrix3D learns to fill in gaps in the data, enabling it to train on a much wider range of datasets than would otherwise be possible.
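The mask learning idea can be illustrated with a small sampler that assigns each view's image, camera, and depth a role during training: visible input, masked target, or missing. This is a minimal sketch under stated assumptions, not Apple's implementation; the role names, `keep_prob`, and the rule that guarantees at least one visible input are illustrative choices.

```python
import random

MODALITIES = ("image", "camera", "depth")


def sample_roles(available, rng, keep_prob=0.5):
    """Assign every (view, modality) slot a role: 'input', 'target', or 'missing'.

    Hypothetical helper. available: one set per view naming the modalities the
    dataset provides. Slots the dataset lacks are 'missing': masked out of the
    input and excluded from the loss, so partially annotated data stays usable.
    """
    roles = []
    for view in available:
        view_roles = {}
        for m in MODALITIES:
            if m not in view:
                view_roles[m] = "missing"
            elif rng.random() < keep_prob:
                view_roles[m] = "input"   # shown to the model as a condition
            else:
                view_roles[m] = "target"  # masked; the model must predict it
        roles.append(view_roles)
    # Ensure the model always has at least one visible condition.
    if not any(r == "input" for vr in roles for r in vr.values()):
        first = next(((i, m) for i, vr in enumerate(roles)
                      for m in MODALITIES if vr[m] == "target"), None)
        if first is not None:
            roles[first[0]][first[1]] = "input"
    return roles


def loss_weights(roles):
    """Supervise only masked slots that actually have ground truth."""
    return [{m: 1.0 if r == "target" else 0.0 for m, r in vr.items()}
            for vr in roles]
```

For example, a clip with images and camera poses but no depth always has its depth slots marked "missing", while its images and poses alternate between serving as conditions and as targets. In this framing, fixing the roles instead of sampling them steers the same model toward one task; images as inputs with cameras as targets, for instance, corresponds to pose estimation.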

The potential applications for Matrix3D span numerous industries where 3D content creation is essential:

- Architecture and real estate: Creating immersive virtual property tours from just a handful of photos

- Gaming and entertainment: Faster, more efficient creation of 3D assets from reference images

- E-commerce: Enabling retailers to create 3D product models from limited photography

- Cultural preservation: Digitally reconstructing historical sites and artifacts

- Augmented reality: Improving how AR systems understand and interact with real-world spaces

For creators who previously needed extensive specialized equipment and training, Matrix3D's ability to work from sparse inputs lowers the barrier to entry for quality 3D content creation.

Performance Breakthroughs

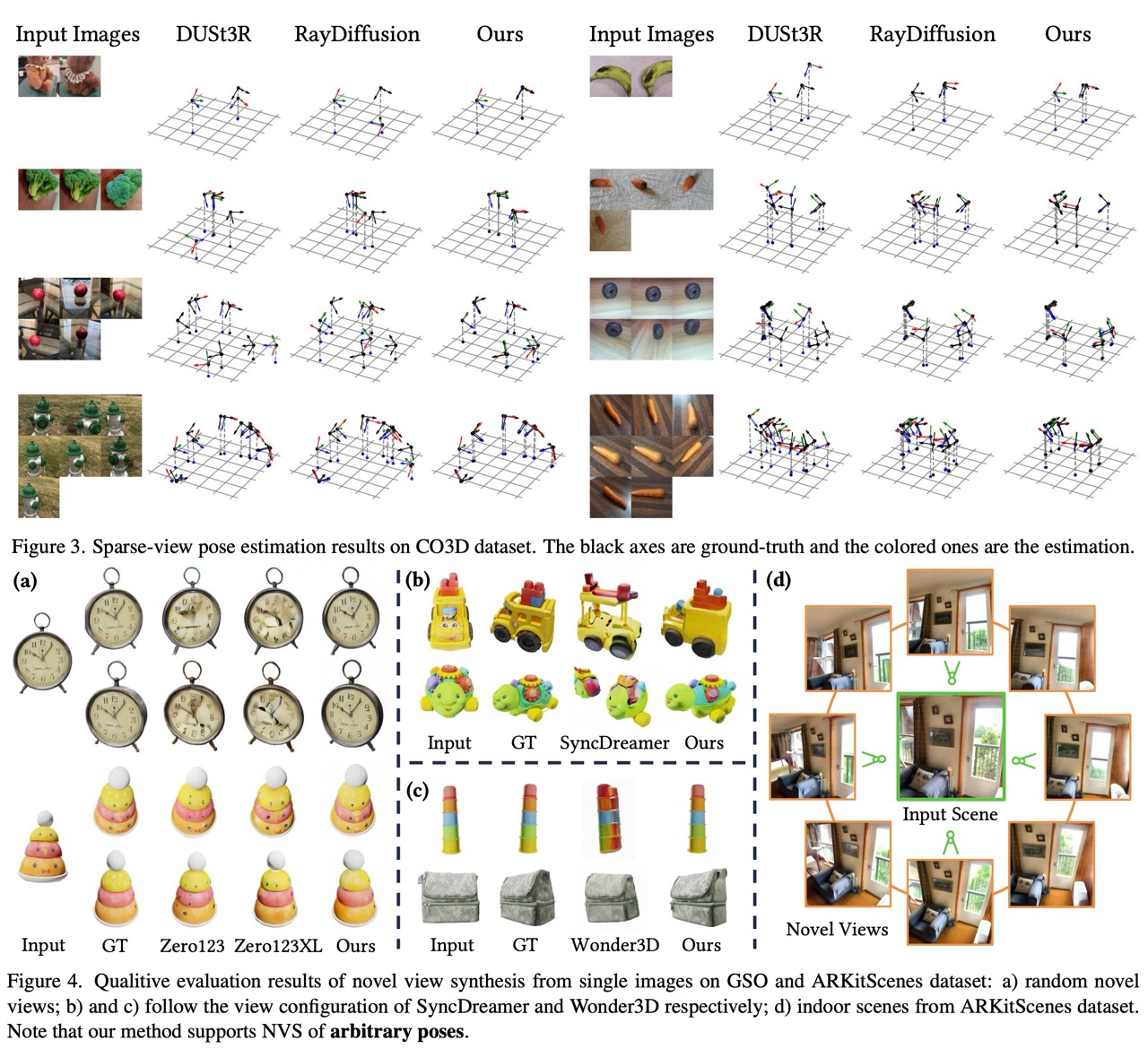

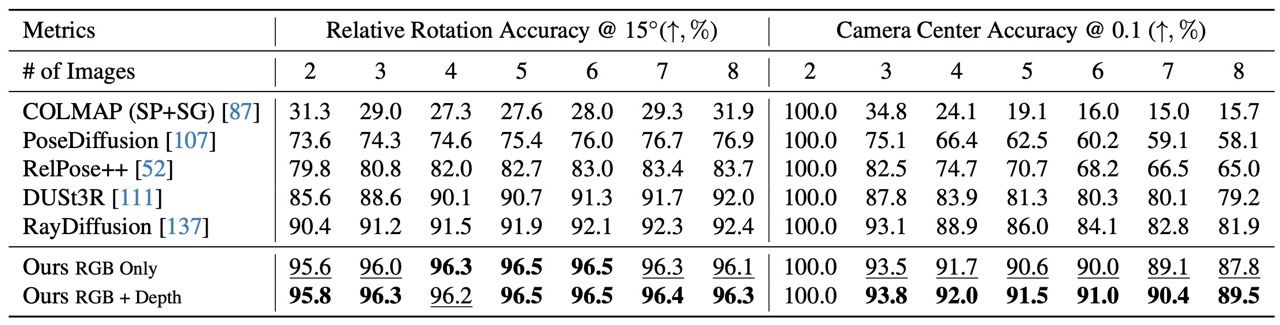

According to the research paper, Matrix3D doesn't just consolidate multiple tasks; it actually outperforms many specialized tools at their own functions.

In pose estimation (determining the position and orientation of a camera), Matrix3D achieved significantly higher accuracy than previous methods, particularly when working with sparse views where traditional techniques often struggle.

For novel view synthesis (generating new perspectives of a scene), the system produced more realistic results than competing approaches, maintaining consistency across different viewpoints—a persistent challenge in 3D reconstruction.
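Novel view synthesis is typically scored by rendering held-out viewpoints and comparing them with the real photos, using metrics such as PSNR, SSIM, and LPIPS. PSNR is the simplest of these; the sketch below computes it for images given as flat lists of pixel values, a simplification assumed here for brevity.

```python
import math


def psnr(pred, target, max_value=1.0):
    """Peak signal-to-noise ratio in dB between two equally sized images.

    pred, target: flat lists of pixel values in [0, max_value].
    Higher is better; identical images give infinity.
    """
    if len(pred) != len(target):
        raise ValueError("images must have the same number of pixel values")
    mse = sum((p - t) ** 2 for p, t in zip(pred, target)) / len(pred)
    if mse == 0:
        return math.inf
    return 10.0 * math.log10(max_value ** 2 / mse)
```

Because PSNR is computed per view, averaging it over many held-out views also rewards consistency: a method that looks sharp from one angle but drifts from another will lose points on the drifting views.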

Training Data Challenges

Creating a system like Matrix3D requires enormous amounts of diverse training data. Apple tackled this challenge by combining six major datasets covering various environments:

- Objaverse and MVImgNet: Object-centric datasets

- CO3D-v2: Common objects in 3D

- RealEstate10k: Indoor and outdoor residential spaces

- Hypersim and ARKitScenes: Large-scale indoor environments

Given the wide variance in these datasets, Apple had to implement rigorous normalization techniques to ensure consistent processing across different scales and scene types.
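The article does not describe Apple's exact normalization. One hypothetical scheme is sketched below: re-center the camera positions on their mean and rescale the scene so the average camera distance from that center is 1, applying the same factor to depth maps so geometry stays consistent. The function name and the fallback rule for single-camera scenes are illustrative assumptions.

```python
import math


def normalize_scene(centers, depths):
    """Rescale a scene to a canonical size (hypothetical normalization scheme).

    centers: list of (x, y, z) camera centers in world coordinates.
    depths:  list of per-view depth maps as flat lists of floats (0 = invalid).
    Returns (new_centers, new_depths, scale).
    """
    n = len(centers)
    mean = tuple(sum(c[k] for c in centers) / n for k in range(3))
    shifted = [tuple(c[k] - mean[k] for k in range(3)) for c in centers]
    spread = sum(math.sqrt(sum(x * x for x in c)) for c in shifted) / n
    if spread == 0:
        # Single or coincident cameras: fall back to a median of valid depths.
        valid = sorted(d for dm in depths for d in dm if d > 0)
        spread = valid[len(valid) // 2] if valid else 1.0
    scale = 1.0 / spread
    new_centers = [tuple(x * scale for x in c) for c in shifted]
    # Translation does not change depth, but a uniform scale does.
    new_depths = [[d * scale for d in dm] for dm in depths]
    return new_centers, new_depths, scale
```

Sharing one scale factor between camera positions and depth is the key design choice: scaling them independently would make poses and depth maps from the same scene disagree about its size.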

The Competitive Landscape

Apple isn't alone in pursuing unified approaches to 3D reconstruction. Google's NeRF-based models, NVIDIA's Instant NeRF, and research projects such as DUSt3R from Naver Labs Europe have made strides in this area.

What distinguishes Matrix3D is its combination of state-of-the-art performance with remarkable flexibility. While some competing systems may excel at specific tasks, Apple's approach maintains high performance across multiple functions while adding the ability to handle varying input combinations.

Despite its impressive capabilities, Matrix3D does have limitations. The computational requirements for training such a system are substantial: Apple reports using 64 NVIDIA A100 GPUs for initial training, placing the training of comparable models out of reach for all but the most well-resourced organizations.

Privacy advocates have also raised questions about technologies that can create detailed 3D models from limited inputs, though Apple has not yet detailed how they plan to address these concerns in consumer applications.

While Matrix3D is currently presented as a research project rather than a consumer product, its capabilities align with Apple's long-term vision of more immersive computing experiences. Whether we'll see these capabilities in future Apple products remains to be seen, but the technology demonstrates the company's commitment to advancing the state of the art in 3D content creation.

Research Paper:

Matrix3D: Large Photogrammetry Model All-in-One

GitHub:

https://github.com/apple/ml-matrix3d