AI Summary

Multimodal AI combines multiple data types (text, images, audio, video) to create more human-like AI systems. It replicates human perception by integrating different senses and aims to revolutionize industries such as customer service, media, entertainment, medicine, and accessibility. With rapid advances and a market projected to reach an estimated $8.4 billion by 2030, multimodal AI has the potential to transform industries with innovative products like wearable devices and smart glasses, but it also presents challenges in data requirements, alignment, deployment complexity, hallucinations, bias, security, and ethics.

Multimodal AI is an emerging field that combines multiple types of data, such as text, images, audio, and video, to create more sophisticated and human-like AI systems. This approach mimics how humans perceive and interact with the world, using various senses to gather and process information. As AI continues to evolve, multimodal AI is poised to become the next frontier, offering new opportunities and challenges for businesses and researchers alike.

The Power of Multimodality

Humans have always relied on multiple senses to learn about and navigate the world. We combine visual, auditory, and tactile information to form a comprehensive understanding of our surroundings. Multimodal AI aims to replicate this process by integrating different data types into a single AI model.

According to Jina AI CEO Han Xiao, "Communication between humans is multimodal. They use text, voice, emotions, expressions, and sometimes photos." As AI continues to advance, it is likely that future human-machine interactions will also be multimodal, enabling more natural and intuitive communication.

How Multimodal AI Works

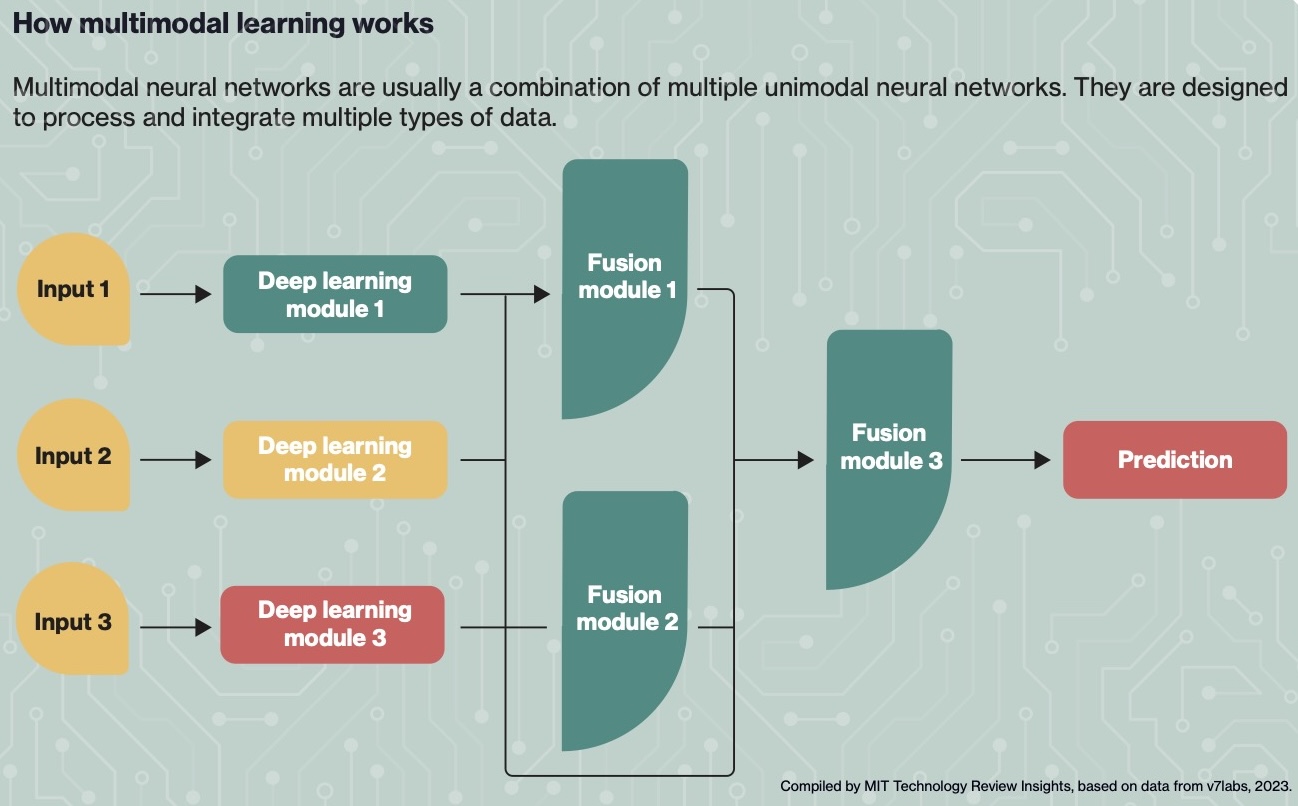

Multimodal AI models typically consist of several unimodal models, each designed to process a specific type of data. For example, a text model might use words as tokens for embedding, while an image model uses pixels. These unimodal models are then combined through a process called fusion, which aligns the elements of each model to create a multifaceted representation of reality.

Fusion is a complex technological challenge, as researchers must find ways to align human knowledge across different modalities, such as text, visuals, audio, and 3D mesh. Despite these challenges, rapid advances have been made in recent years, and basic multimodal AI models are now widely available.
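
To make the idea of fusion a little more concrete, here is a minimal sketch in Python. It is not how any production multimodal model works: the two "encoders" are toy stand-ins (a word-bucket counter and a pixel-intensity histogram), the function names are hypothetical, and the fusion step is assumed to be simple concatenation of normalized vectors, which is only one of several possible fusion strategies.

```python
import math


def encode_text(text, dim=8):
    """Toy text encoder (assumption): bucket each word and count occurrences."""
    vec = [0.0] * dim
    for word in text.lower().split():
        # Deterministic bucket index derived from the word's characters.
        vec[sum(ord(c) for c in word) % dim] += 1.0
    return vec


def encode_image(pixels, dim=4):
    """Toy image encoder (assumption): histogram of grayscale values in 0-255."""
    vec = [0.0] * dim
    for p in pixels:
        vec[min(p * dim // 256, dim - 1)] += 1.0
    return vec


def l2_normalize(vec):
    """Scale a vector to unit length so no single modality dominates."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else vec


def fuse(text_vec, image_vec):
    """Concatenation fusion: normalize each modality, then join the vectors."""
    return l2_normalize(text_vec) + l2_normalize(image_vec)


text_vec = encode_text("a tree with rustling leaves")
image_vec = encode_image([12, 200, 180, 45, 90, 255, 0, 130])
fused = fuse(text_vec, image_vec)
print(len(fused))  # 8 text dimensions + 4 image dimensions
```

Real systems replace the toy encoders with trained neural networks and usually learn how to align the modalities rather than just concatenating them, but the overall shape is the same: separate representations per modality, combined into one joint representation.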

Think of it this way: instead of just reading about a tree, AI could also see its image and hear the rustle of its leaves, creating a deeper, more comprehensive understanding. This has massive potential across industries:

- Customer service: Chatbots that understand not just your words but also your tone of voice and facial expressions.

- Media and entertainment: AI tools that automatically translate videos, generate realistic animations, and personalize content based on your preferences.

- Medicine: Systems that analyze medical images and patient data to provide more accurate diagnoses and treatment plans.

- Accessibility: Tools that help visually impaired individuals navigate the web by describing images and videos.

Applications and Opportunities

The market for multimodal AI is expected to grow rapidly, reaching an estimated $8.4 billion by 2030, according to KBV Research. Multimodal AI has the potential to transform various industries, from media and entertainment to healthcare and customer service. We're already seeing innovative products and services emerge:

- Humane AI Pin: This device takes wearables to a whole new level. It combines a laser projector, a depth sensor, and a powerful AI engine to project information onto any surface, transforming your hand into a dynamic interface. Imagine answering calls, seeing notifications, or even getting directions directly projected onto your palm! The Pin's ability to understand voice commands, gestures, and visual cues makes it a truly multimodal marvel.

- Meta Ray-Ban Smart Glasses: These stylish glasses do much more than just protect your eyes. Equipped with built-in cameras and microphones, they allow you to capture photos and videos, listen to music, and even take calls, all hands-free. The AI integration takes things further, enabling real-time language translation, object recognition, and information retrieval based on what you see.

- Rabbit R1: This personal AI assistant goes beyond simple voice commands. It acts as a central hub for all your apps, allowing you to interact with them using natural language. Need to book a flight, order groceries, and schedule a meeting? Just tell Rabbit R1, and it will take care of it all by seamlessly navigating between different apps and services. This multimodal approach streamlines your digital life and saves you precious time.

- AI-powered Media and Entertainment: Multimodal AI can help create multilingual content by automatically translating and dubbing videos, adjusting the visuals to appear natural rather than dubbed. Imagine changing the language spoken in a video while keeping the speaker's voice and lip movements perfectly synced. This is becoming a reality thanks to multimodal AI, offering exciting possibilities for the entertainment and media industry.

- Accessibility: Multimodal AI-enabled tools can assist individuals with visual impairments by providing alt-text descriptions of images and videos on webpages, making the internet more accessible.

As multimodal AI continues to develop, the number of use cases and product innovations will only grow, presenting significant opportunities for businesses to improve user experiences and create new revenue streams.

Challenges and Complexities

While multimodal AI offers numerous benefits, it also presents a new set of challenges and complexities. Some of the key issues include:

- Data Requirements: Multimodal AI models require vast amounts of data, often more than unimodal models. This can be a significant barrier for companies without access to large, aligned datasets.

- Alignment: Ensuring proper alignment between different modalities within a model remains a significant challenge, limiting the number of companies that can build foundational multimodal models.

- Deployment Complexity: Deploying multimodal AI can be more complicated than deploying unimodal models. It requires specialized software, and users face a learning curve before they can use multimodal prompts effectively.

- Hallucinations: Multimodal AI can exacerbate the issue of hallucinations, where AI models produce confident but false outputs. Detecting and mitigating these errors is more challenging in multimodal systems.

- Bias and Ethics: As with unimodal AI, multimodal models can inherit biases from their training data and be vulnerable to malicious use, raising important ethical concerns. One way to address this is the ethical and copyright filters applied to many models. These, however, can bring their own unintended risks.

- Security: The increased complexity and data requirements of multimodal AI models can create additional security vulnerabilities, making it crucial for companies to prioritize robust security measures. Even in unimodal AI, it is difficult to rule out that company data may be vulnerable to hacking. With the greater volume of data and complexity required for multimodal AI, the number of possible attack vectors increases.

Multimodal AI represents the next frontier in AI, offering the potential to create more human-like, intuitive, and powerful AI systems. As the technology continues to advance, businesses across various industries will need to navigate the complexities and challenges associated with multimodal AI to harness its full potential.

2024 will be ‘The year of AI glasses’

The glasses’ “ability to take live data ingested visually and translate that into actionable text or recommendations is going to be increasingly exciting,” says Henry Ajder, Founder, Latent Space. “It is something that people will use.” He is not alone: Computerworld says “2024 will be ‘The year of AI glasses.’”

Despite the challenges, the benefits of multimodal AI are clear, and companies that successfully implement this technology will be well-positioned to create innovative products, improve user experiences, and drive growth in the years to come. As Henry Ajder, founder of AI consultancy Latent Space, notes, "Multimodal has clear benefits and advantages, but there's no sugarcoating the complexities it brings." Embracing these complexities and investing in the development of responsible, robust multimodal AI systems will be key to unlocking the full potential of this exciting new frontier.

Full Report: MIT Technology Review Insights - Multimodal: AI’s new frontier
